The past two years have been challenging on many fronts for people and businesses alike. As a global community, we have been unable to travel long distances due to various restrictions, but that doesn’t mean we have stopped reaching for the stars.

During this same time frame, monumental advancements and achievements in space exploration were made. They range from the successful launch of SpaceX’s Inspiration4 mission, which carried four civilian astronauts to orbit, to NASA’s Lucy mission, launched to study Jupiter’s Trojan asteroids in a quest for deeper knowledge of planetary origins and the formation of our solar system.

We also saw many private companies take up space exploration, with successful launches by Rocket Lab and Virgin Orbit, while Space Perspective has started raising money for a balloon-based venture that will take paying customers to the stratosphere.

These advancements have astonished young and old alike and have given a new meaning to the space race. Actor William Shatner, best known for his role as Captain Kirk from “Star Trek,” recently made history as the oldest person to fly into space. The 90-year-old was one of four passengers aboard Blue Origin’s second human spaceflight.

With the “final frontier” now open to private companies, how do we transform the way we continually update the software that runs in space? The term “digital transformation” is nearly ubiquitous here on Earth, but it’s becoming increasingly clear that its concepts will also play a key support role in the next wave of space exploration.

Why DevOps in space?

One of the great revelations of the new space industry is that it’s being eaten alive by software. The ability of software to manage communication satellites, and to do what Starlink is doing in developing a low-latency internet system for consumers, is vital.

When you look at everything going on in the “new space” — watching Earth, traveling into deep space, the moon, Mars, etc. — all of these achievements wouldn’t be possible without software. Software is getting smarter, better and easier to update; however, the amount of compute power needed to execute software commands in space is growing exponentially.

Meanwhile, the cost of launching payloads into space is decreasing rapidly, particularly when compared to just five years ago. Currently, there are more than 2,000 functioning satellites in orbit, but planned constellations will add more than 40,000 satellites in the coming years. We’re going to see growing numbers of companies creating more evolved infrastructure faster in order to keep upgrading their satellites and constellations with more efficient and powerful software.

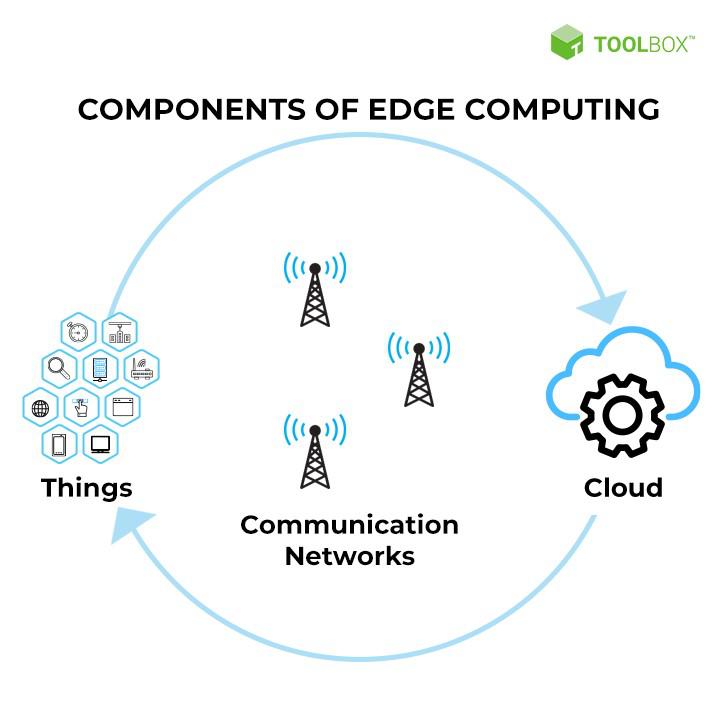

Like we see in other environments where edge computing is critical — automotive, energy/utilities, warehouses and last-mile retail delivery, to name just a few — companies that reliably, securely and continuously update their satellite software will have a huge advantage over the competition.

Release fast or risk crashing your satellites

One of the biggest technological pain points in space travel is power consumption. On Earth, we’re beginning to see more efficient CPUs and memory, but in space, dissipating the heat from your CPU is quite hard, which makes power consumption the critical constraint. From hardware to software to the way you do processing, everything needs to account for power consumption.

On the flip side, in space, there is one thing that you have plenty of (obviously): space. This means that the size of physical hardware is less of a concern. Weight and power consumption are larger issues, because those factors also impact the way microchips and microprocessors are designed.

A great example of this can be found in the Ramon Space design. The company uses AI- and machine learning-powered processors to build space-resilient supercomputing systems with Earth-like computing capabilities, with the hardware components ultimately controlled by the software they have riding on top of them. The idea is to optimize the way software and hardware are utilized so applications can be developed and adapted in real time, just as they would be here on Earth.

Against this backdrop, the DevOps practices of coding, testing, validating, analyzing and distributing are roughly the same as they are on Earth, but the types of hardware, emulation, feedback loops and reliable testing of software are very different.

My personal view is that we need to create a new way of performing continuous delivery and continuous updates in space. On Earth, many organizations use an orchestrator to handle continuous updates: the automated configuration, management and coordination of systems, apps and services that helps IT teams manage complex tasks and workflows efficiently. As of now, there is no true equivalent for satellites in space, and the tools that do exist are extremely limiting.

For example, an orchestrator needs to send and control satellite updates from the ground, which creates a high degree of risk around concerns such as data security.

Today’s space innovators need to think about a way to give satellites the ability to receive all of the information and data required to run updates correctly, as well as quickly and correctly recover from bad updates to ensure a valid set of binaries on the satellite. That said, I am optimistic that we’re at the onset of a revolution that will soon make the ability to efficiently deliver binaries to next-generation satellites a reality.
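To make the idea of recovering from a bad update concrete, here is a minimal sketch in Python of one common pattern: stage the new binaries in a standby slot, verify each one against a manifest of SHA-256 digests, and switch over only if everything checks out. It is an illustration under assumptions, not a description of how any real satellite works. The names `Slot`, `Updater`, `apply`, `rollback` and the file name `fsw.bin` are all hypothetical.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a binary as a hex string."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class Slot:
    """Hypothetical on-board storage slot holding one complete set of binaries."""
    binaries: dict[str, bytes] = field(default_factory=dict)


@dataclass
class Updater:
    """Hypothetical two-slot updater: one active slot, one standby slot."""
    active: Slot
    standby: Slot = field(default_factory=Slot)

    def apply(self, manifest: dict[str, str], payload: dict[str, bytes]) -> bool:
        """Verify every binary named in the manifest, then swap slots.

        If any binary is missing or its digest does not match, nothing is
        changed and the previously active binaries keep running.
        """
        staged: dict[str, bytes] = {}
        for name, expected_digest in manifest.items():
            blob = payload.get(name)
            if blob is None or sha256_hex(blob) != expected_digest:
                return False  # reject the update, active slot untouched
            staged[name] = blob
        # All digests matched: the old active set becomes the fallback.
        self.standby, self.active = self.active, Slot(staged)
        return True

    def rollback(self) -> None:
        """Return to the previous set of binaries, e.g. after a failed health check."""
        self.active, self.standby = self.standby, self.active
```

A ground station in this sketch would uplink the manifest and payload together; an update corrupted in transit fails `apply` and leaves the satellite on its last valid set of binaries, while an update that verifies but misbehaves can still be undone with `rollback`.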

Boldly going where no person (or computing system) has gone before

When reflecting on the current space race, it’s hard not to think about “Star Trek” and how we as an industry and global community are boldly going where no one has gone before.

We’re continuing to adapt and change to the environment and challenges we face on Earth, and that is now extending to outer space. Shatner’s trip into space was emotional for the legendary actor; upon his return, he told Blue Origin and Amazon founder Jeff Bezos, “I’m so filled with emotion about what just happened. I just, it’s extraordinary, extraordinary. I hope I never recover from this.”

That raw human emotion is a product of the innovative technology that made the trip possible, as well as a curiosity and playful desire to push the boundaries.

Under this new paradigm, space has transformed from the “final frontier” to the next opportunity with nearly endless possibilities. In this sense, it’s a unique time to be alive, and I encourage my fellow DevOps peers across all industries to continue reaching for the stars — literally.