The final 4th Gen Sapphire Rapids-SP Xeon CPU with its multi-chiplet design housing Compute & HBM2e tiles. (Image Credits: CNET)

Intel has yet to announce a proper roadmap for its next-gen Xeon CPUs. While the company has outlined its upcoming products, few details are known, whereas AMD has already offered early numbers for its 5nm EPYC lineup. So The Next Platform, with the help of its sources and a touch of speculation, has put together its own roadmap covering Intel's Xeon family up to Diamond Rapids.

Intel Next-Gen Xeon CPU Rumors Talk Emerald Rapids, Granite Rapids & Diamond Rapids: Up To 144 Lion Cove Cores By 2025

As a precaution, note that the specifications and information posted by The Next Platform are mostly estimates based on speculation and rumors, along with hints from its sources. They have not been confirmed by Intel, so take them with a grain of salt. Still, they offer some insight into where Intel could head with its next-gen lineups.


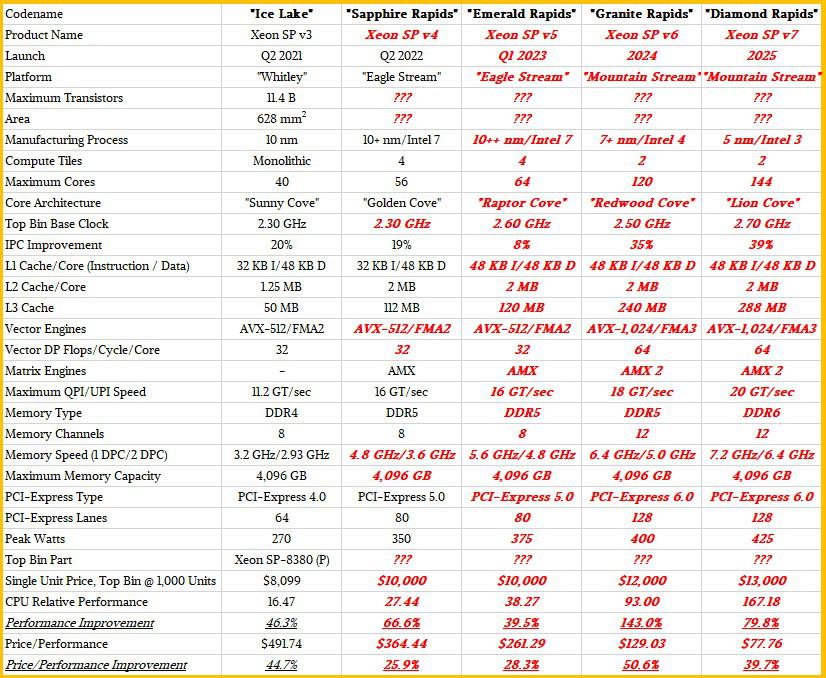

Intel Xeon CPU Family Generational Roadmap (Estimated / Credits: The Next Platform):

Intel Xeon CPU Family Generational IPC Uplift (Estimated / Credits: The Next Platform):

Intel Sapphire Rapids-SP 4th gen Xeon CPU Family

The Intel Sapphire Rapids-SP Xeon CPUs will be the first to introduce a multi-tile chiplet design, as we detailed here. The SoC will pack the latest Golden Cove core architecture, which also powers the Alder Lake lineup. The blue team plans to offer a maximum of 56 cores and 112 threads with a TDP of up to 350W. AMD, on the other hand, will offer up to 96 cores and 192 threads at up to 400W TDP with its EPYC Genoa processors. AMD would also hold a big advantage in cache sizes and I/O capabilities (more PCIe lanes, higher DDR5 capacities, and a larger L3 cache).


Sapphire Rapids-SP will come in two package variants: a standard configuration and an HBM configuration. The standard variant uses a chiplet design composed of four XCC dies, each measuring around 400mm². That figure is for a single XCC die, and the top Sapphire Rapids-SP Xeon chip will carry four of them. The dies are interconnected via EMIB, which has a bump pitch of 55µm and a core pitch of 100µm.

The standard Sapphire Rapids-SP Xeon chip will feature 10 EMIB interconnects, and the entire package will measure a mighty 4446mm². The HBM variant increases the number of interconnects to 14, since additional links are needed to connect the HBM2E memory to the compute tiles.

The four HBM2E memory packages will use 8-Hi stacks, so Intel is going for at least 16 GB of HBM2E memory per stack, for a total of 64 GB across the Sapphire Rapids-SP package. As for the package itself, the HBM variant will measure an insane 5700mm², or 28% larger than the standard variant. Compared to the recently leaked EPYC Genoa numbers, the Sapphire Rapids-SP HBM2E package would end up 5% larger, while the standard package would be about 18% smaller (put another way, Genoa would be about 22% larger than the standard package).
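The capacity and package-size figures above come down to simple arithmetic, sketched below. Two assumptions are ours, not the article's: each 8-Hi HBM2E stack is built from 16 Gb (2 GB) DRAM dies, and the Genoa package area is back-calculated from the "HBM variant is 5% larger" claim rather than taken from the leak itself.

```python
# Assumption: 16 Gb (2 GB) DRAM dies per layer; the article only states
# 8-Hi stacks and "at least 16 GB" per stack.
DIE_CAPACITY_GB = 2
STACK_HEIGHT = 8        # 8-Hi stacks
NUM_STACKS = 4          # four HBM2E packages on the HBM variant

per_stack_gb = DIE_CAPACITY_GB * STACK_HEIGHT
total_hbm_gb = per_stack_gb * NUM_STACKS

# Package areas in mm^2 as reported in the article.
STANDARD_PKG_MM2 = 4446
HBM_PKG_MM2 = 5700

hbm_vs_standard = HBM_PKG_MM2 / STANDARD_PKG_MM2 - 1

# Assumption: Genoa's package area is inferred from "HBM variant is 5% larger".
genoa_pkg_mm2 = HBM_PKG_MM2 / 1.05
standard_vs_genoa = STANDARD_PKG_MM2 / genoa_pkg_mm2 - 1   # negative = smaller
genoa_vs_standard = genoa_pkg_mm2 / STANDARD_PKG_MM2 - 1

print(f"HBM2E per stack: {per_stack_gb} GB, total: {total_hbm_gb} GB")
print(f"HBM package vs standard: {hbm_vs_standard:+.1%}")
print(f"Implied Genoa package: {genoa_pkg_mm2:.0f} mm^2")
print(f"Standard package vs Genoa: {standard_vs_genoa:+.1%}")
print(f"Genoa vs standard package: {genoa_vs_standard:+.1%}")
```

Note how the percentage base matters: the same two areas give roughly 18% when measured against Genoa and roughly 22% when measured against the standard Sapphire Rapids-SP package.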

According to The Next Platform, the top SKU is expected to offer a base clock of up to 2.3 GHz, support for up to 4 TB of DDR5 memory, 80 PCIe Gen 5.0 lanes, and a peak power of 350W. The Sapphire Rapids-SP Xeon lineup would offer up to a 66% performance improvement over Ice Lake-SP chips and a 25.9% performance/price improvement.

Intel also states that EMIB delivers twice the bandwidth density and four times the power efficiency of standard package designs. Interestingly, Intel calls the latest Xeon lineup 'logically monolithic', meaning the interconnect lets the chip function as a single die would, even though it is technically four chiplets linked together. You can read the full details regarding the standard 56-core & 112-thread Sapphire Rapids-SP Xeon CPUs here.

AMD has really turned the tide with its Zen-powered EPYC CPUs in the server segment, but it looks like Intel is planning a resurgence with its upcoming Xeon CPU families. The first step in its course correction will be Emerald Rapids, which is expected to launch in Q1 2023.

Intel Emerald Rapids-SP 5th Gen Xeon CPU Family

Intel's Emerald Rapids-SP Xeon CPU family is expected to be based on a more mature 'Intel 7' node. You can think of it as a 2nd Gen 'Intel 7' node, which should deliver slightly higher efficiency.

Emerald Rapids is expected to use the Raptor Cove core architecture, an optimized variant of Golden Cove that should deliver a 5-10% IPC improvement over it. It will also pack up to 64 cores and 128 threads, a small bump over the 56 cores and 112 threads of Sapphire Rapids chips.

The top part is expected to feature a base clock of up to 2.6 GHz, 120 MB of L3 cache, support for up to DDR5-5600 memory (up to 4 TB), and a slight TDP increase to 375W. Performance is expected to increase by 39.5% over Sapphire Rapids, and performance/price is expected to go up by 28.3%. Most of the performance increase, however, will come from clock and process optimizations on the enhanced Intel 7 (10ESF+) node.
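Interestingly, the 39.5% figure can be reproduced by multiplying the core-count, base-clock, and IPC ratios together. The sketch below is our own back-of-the-envelope check, not The Next Platform's stated method: it assumes performance scales linearly with each factor, uses an 8% IPC uplift for Raptor Cove, and, for the Ice Lake-SP comparison, assumes the top Ice Lake-SP part (Xeon Platinum 8380) also runs a 2.3 GHz base clock, a figure not given in the article.

```python
def estimated_speedup(cores_old, cores_new, clock_old_ghz, clock_new_ghz, ipc_uplift):
    """Relative throughput, assuming performance scales linearly with
    core count, base clock, and IPC (a simplifying assumption)."""
    return (cores_new / cores_old) * (clock_new_ghz / clock_old_ghz) * (1 + ipc_uplift)

# Emerald Rapids vs Sapphire Rapids: 64 vs 56 cores, 2.6 vs 2.3 GHz, ~8% IPC.
emr_vs_spr = estimated_speedup(56, 64, 2.3, 2.6, 0.08)

# Sapphire Rapids vs Ice Lake-SP: 56 vs 40 cores, 19% IPC.
# Assumption: both top parts run a 2.3 GHz base clock.
spr_vs_icx = estimated_speedup(40, 56, 2.3, 2.3, 0.19)

print(f"Emerald Rapids vs Sapphire Rapids: {emr_vs_spr - 1:+.1%}")
print(f"Sapphire Rapids vs Ice Lake-SP: {spr_vs_icx - 1:+.1%}")
```

Under this model, the extra cores (about 14%) and the higher base clock (about 13%) each contribute more than the roughly 8% IPC gain, and the Ice Lake-SP comparison lands at about 67%, close to the quoted 66%.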

It is reported that by the time Intel releases its Emerald Rapids-SP Xeon CPUs, AMD will already have released its Zen 4C-powered EPYC Bergamo chips, so the Xeon lineup may end up being too little, too late, with only Intel's advanced instruction sets giving it an edge in niche workloads. On the plus side, Emerald Rapids will remain compatible with the Eagle Stream platform (LGA 4677) and will offer up to 80 PCIe Gen 5 lanes along with faster DDR5-5600 memory speeds.

Intel Granite Rapids-SP 6th Gen Xeon CPU Family

Moving over to Granite Rapids-SP, this is where Intel really starts to make big changes to its lineup. Intel has confirmed that its Granite Rapids-SP Xeon CPUs will be based on the 'Intel 4' process node (formerly 7nm EUV), but according to the leaked info, Granite Rapids keeps shifting position on the roadmaps, so we aren't exactly sure when the chips will come to market. One possibility is sometime between 2023 and 2024, as Emerald Rapids will serve as an intermediary solution rather than a proper Xeon family replacement.

Granite Rapids-SP Xeon chips will reportedly use the Redwood Cove core architecture and feature increased core counts, though the exact number is not provided. Intel did tease a high-level overview of its Granite Rapids-SP CPU during its 'Accelerated' keynote, which appeared to show several dies packaged in a single SoC through EMIB, including HBM packages and high-bandwidth Rambo Cache packages. Each compute die appears to hold 60 cores, for 120 cores in total, but we should expect a few of those cores to be disabled to improve yields on the new Intel 4 process node.
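Why disabling a few cores helps yields can be illustrated with a simple binomial model. This is a generic sketch, not Intel's methodology: it assumes each core on a 60-core die fails independently with the same probability, and the 2% per-core defect rate is a hypothetical number chosen purely for illustration.

```python
from math import comb

def prob_at_least_k_good(n_cores, k_required, p_defect):
    """Probability that at least k of n cores on a die are defect-free,
    assuming each core fails independently with probability p_defect."""
    p_good = 1 - p_defect
    return sum(
        comb(n_cores, i) * p_good**i * p_defect**(n_cores - i)
        for i in range(k_required, n_cores + 1)
    )

# Hypothetical per-core defect rate on a new node (illustrative only).
P_DEFECT = 0.02
CORES_PER_DIE = 60

for enabled in (60, 58, 56):
    y = prob_at_least_k_good(CORES_PER_DIE, enabled, P_DEFECT)
    print(f"{enabled} of {CORES_PER_DIE} cores usable: {y:.1%} of dies qualify")
```

Requiring all 60 cores to work leaves only about 30% of dies usable at this made-up defect rate, while tolerating a few disabled cores lets the large majority of dies qualify.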

AMD will be increasing the core counts of its own Zen 4C EPYC lineup with Bergamo, pushing up to 128 cores and 256 threads, so despite Intel doubling its core count, it still might not match AMD's disruptive multi-core and multi-threaded lead. In terms of IPC, however, this is where Intel might start getting close to AMD's Zen architecture in the server segment and get back in the game. The CPU is said to feature up to 128 PCIe Gen 6.0 lanes and a TDP of up to 400W. It will also support up to 12-channel DDR5 memory at speeds of up to DDR5-6400. Performance over Emerald Rapids is expected to almost double thanks to the doubled core count and enhanced core architecture, while overall performance/price is expected to go up by 50%.
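The jump to 12-channel DDR5-6400 roughly doubles theoretical memory bandwidth over Sapphire Rapids. The sketch below computes peak bandwidth as channels × transfer rate × bytes per transfer, assuming a 64-bit data path per channel (ECC bits excluded) and using the channel counts and speeds from the roadmap; real sustained bandwidth is lower.

```python
def peak_dram_bandwidth_gbs(channels, mt_per_s, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s: channels x MT/s x bytes per transfer.
    Assumes a 64-bit data path per channel (ECC excluded)."""
    return channels * mt_per_s * (bus_width_bits // 8) / 1000

configs = {
    "Sapphire Rapids (8ch DDR5-4800)": (8, 4800),
    "Emerald Rapids (8ch DDR5-5600)": (8, 5600),
    "Granite Rapids (12ch DDR5-6400)": (12, 6400),
}

for name, (ch, speed) in configs.items():
    print(f"{name}: {peak_dram_bandwidth_gbs(ch, speed):.1f} GB/s")
```

This works out to roughly 307 GB/s for Sapphire Rapids versus roughly 614 GB/s for Granite Rapids per socket, which matters for keeping a doubled core count fed.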

One interesting feature mentioned by The Next Platform is that starting with Granite Rapids, Intel Xeon CPUs will adopt AVX-1024/FMA3 vector engines for increased performance across a wide range of workloads. That said, power consumption when running these instructions would likely rise substantially. Granite Rapids and future generations of Xeon CPUs will be compatible with a new 'Mountain Stream' platform.
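To see why wider vectors matter, theoretical FP64 throughput can be estimated as cores × clock × FLOPs per cycle, where each FMA counts as two FLOPs per vector lane. This is a sketch under stated assumptions: two FMA units per core, the 56-core / 2.3 GHz Sapphire Rapids top SKU from the article, and base clocks (real AVX workloads often run lower). The 1024-bit case is purely hypothetical, since AVX-1024 is only a rumor.

```python
def peak_fp64_gflops(cores, clock_ghz, vector_bits, fma_units_per_core=2):
    """Theoretical FP64 peak in GFLOPS; each FMA counts as 2 FLOPs per lane.
    Assumes fma_units_per_core FMA pipes, all busy every cycle."""
    lanes = vector_bits // 64                 # FP64 lanes per vector register
    flops_per_cycle = lanes * 2 * fma_units_per_core
    return cores * clock_ghz * flops_per_cycle

# Sapphire Rapids top SKU from the article: 56 cores at 2.3 GHz, AVX-512.
avx512 = peak_fp64_gflops(56, 2.3, 512)

# Hypothetical: the same chip with the rumored 1024-bit vectors.
avx1024 = peak_fp64_gflops(56, 2.3, 1024)

print(f"AVX-512 peak:  {avx512:.1f} GFLOPS")
print(f"AVX-1024 peak: {avx1024:.1f} GFLOPS (hypothetical)")
```

Doubling the vector width doubles the work done per cycle on paper, which is also why the power draw concern above follows: more lanes switching every cycle means more energy per cycle.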

Intel Diamond Rapids-SP 7th Gen Xeon CPU Family

Come Diamond Rapids-SP, Intel might finally score its big win against AMD since the first EPYC launch back in 2017. Diamond Rapids Xeon CPUs are touted as 'The Big One' in the leak and are expected to launch by 2025 with a radically new architecture positioned against Zen 5. AMD won't be slowing down either with its Zen 5-based EPYC Turin lineup, as it will be well aware of Intel's planned comeback in the data center and server segment. So far, Intel has not confirmed the architecture or core count of the new chips, but they are expected to be compatible with the same Birch Stream and Mountain Stream platforms that will also support Granite Rapids-SP chips.

The Diamond Rapids 7th Gen Xeon CPUs are expected to feature enhanced Lion Cove cores on the Intel 3 (5nm) process node and offer up to 144 cores and 288 threads. Clock speeds will see a gradual increase from 2.5 to 2.7 GHz (preliminary). As for IPC, the Diamond Rapids chips are expected to offer up to a 39% uplift over Granite Rapids. Overall performance is expected to improve by 80%, while performance/price is expected to improve by 40%. On the platform side, the chips are expected to offer up to 128 PCIe Gen 6.0 lanes, DDR6-7200 memory support, and up to 288 MB of L3 cache.
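The 80% figure also lines up with a cores × clock × IPC estimate, and chaining the per-generation figures gives a rough sense of the cumulative gain over Ice Lake-SP. This is our own back-of-the-envelope sketch, not The Next Platform's stated method. It assumes Granite Rapids at 120 cores and 2.5 GHz and Diamond Rapids at 144 cores and 2.7 GHz (reading the "2.5 to 2.7 GHz" remark that way), linear scaling with each factor, and approximates "almost double" for Granite Rapids as 2.0×, which makes the cumulative result an upper-end estimate.

```python
# Diamond Rapids vs Granite Rapids: 144 vs 120 cores, 2.7 vs 2.5 GHz, ~39% IPC.
# Assumption: performance scales linearly with cores, clock, and IPC.
dmr_vs_gnr = (144 / 120) * (2.7 / 2.5) * 1.39

# Generation-over-generation performance factors quoted in the article.
# Assumption: "almost double" for Granite Rapids is approximated as 2.0x.
gen_factors = [
    ("Sapphire Rapids", 1.66),   # vs Ice Lake-SP
    ("Emerald Rapids", 1.395),   # vs Sapphire Rapids
    ("Granite Rapids", 2.0),     # vs Emerald Rapids (approximate)
    ("Diamond Rapids", 1.80),    # vs Granite Rapids
]

cumulative = 1.0
for name, factor in gen_factors:
    cumulative *= factor
    print(f"{name}: {cumulative:.2f}x Ice Lake-SP")

print(f"Diamond Rapids vs Granite Rapids (model): {dmr_vs_gnr:.2f}x")
```

If these estimates held, the top Diamond Rapids part would land at roughly 8x the throughput of the top Ice Lake-SP chip, with every step resting on unconfirmed figures.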

The Diamond Rapids-SP lineup isn't expected until 2025, so it's still far off. The leak also mentions Sierra Forest, which is not a successor but a variant of the Diamond Rapids-SP Xeon lineup aimed at specific customers, much like AMD's Bergamo or the HBM variants of Sapphire Rapids-SP. It is expected to arrive after Diamond Rapids-SP, by 2026.

Intel Xeon SP Families (Preliminary):

| Family Branding | Skylake-SP | Cascade Lake-SP/AP | Cooper Lake-SP | Ice Lake-SP | Sapphire Rapids | Emerald Rapids | Granite Rapids | Diamond Rapids |
|---|---|---|---|---|---|---|---|---|
| Process Node | 14nm+ | 14nm++ | 14nm++ | 10nm+ | Intel 7 | Intel 7 | Intel 4 | Intel 3? |
| Platform Name | Intel Purley | Intel Purley | Intel Cedar Island | Intel Whitley | Intel Eagle Stream | Intel Eagle Stream | Intel Mountain Stream / Intel Birch Stream | Intel Mountain Stream / Intel Birch Stream |
| Core Architecture | Skylake | Cascade Lake | Cascade Lake | Sunny Cove | Golden Cove | Raptor Cove | Redwood Cove? | Lion Cove? |
| IPC Improvement (Vs Prev Gen) | 10% | 0% | 0% | 20% | 19% | 8%? | 35%? | 39%? |
| MCP (Multi-Chip Package) SKUs | No | Yes | No | No | Yes | Yes | TBD (Possibly Yes) | TBD (Possibly Yes) |
| Socket | LGA 3647 | LGA 3647 | LGA 4189 | LGA 4189 | LGA 4677 | LGA 4677 | TBD | TBD |
| Max Core Count | Up To 28 | Up To 28 | Up To 28 | Up To 40 | Up To 56 | Up To 64? | Up To 120? | Up To 144? |
| Max Thread Count | Up To 56 | Up To 56 | Up To 56 | Up To 80 | Up To 112 | Up To 128? | Up To 240? | Up To 288? |
| Max L3 Cache | 38.5 MB L3 | 38.5 MB L3 | 38.5 MB L3 | 60 MB L3 | 105 MB L3 | 120 MB L3? | 240 MB L3? | 288 MB L3? |
| Vector Engines | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-1024/FMA3? | AVX-1024/FMA3? |
| Memory Support | DDR4-2666 6-Channel | DDR4-2933 6-Channel | Up To 6-Channel DDR4-3200 | Up To 8-Channel DDR4-3200 | Up To 8-Channel DDR5-4800 | Up To 8-Channel DDR5-5600? | Up To 12-Channel DDR5-6400? | Up To 12-Channel DDR6-7200? |
| PCIe Gen Support | PCIe 3.0 (48 Lanes) | PCIe 3.0 (48 Lanes) | PCIe 3.0 (48 Lanes) | PCIe 4.0 (64 Lanes) | PCIe 5.0 (80 Lanes) | PCIe 5.0 (80 Lanes) | PCIe 6.0 (128 Lanes)? | PCIe 6.0 (128 Lanes)? |
| TDP Range (PL1) | 140W-205W | 165W-205W | 150W-250W | 105W-270W | Up To 350W | Up To 375W? | Up To 400W? | Up To 425W? |
| 3D Xpoint Optane DIMM | N/A | Apache Pass | Barlow Pass | Barlow Pass | Crow Pass | Crow Pass? | Donahue Pass? | Donahue Pass? |
| Competition | AMD EPYC Naples 14nm | AMD EPYC Rome 7nm | AMD EPYC Rome 7nm | AMD EPYC Milan 7nm+ | AMD EPYC Genoa ~5nm | AMD Next-Gen EPYC (Post Genoa) | AMD Next-Gen EPYC (Post Genoa) | AMD Next-Gen EPYC (Post Genoa) |
| Launch | 2017 | 2018 | 2020 | 2021 | 2022 | 2023? | 2024? | 2025? |

Next-Gen Intel Xeon vs AMD EPYC Generational CPU Comparison (Preliminary):

| CPU Name | Process Node / Architecture | Cores / Threads | Cache | DDR Memory / Speed / Capacities | PCIe Gen / Lanes | TDPs | Platform | Launch |
|---|---|---|---|---|---|---|---|---|
| Intel Diamond Rapids | Intel 3 / Lion Cove? | 144 / 288? | 288 MB L3? | DDR6-7200 / 4 TB? | PCIe Gen 6.0 / 128? | Up To 425W | Mountain Stream | 2025? |
| AMD EPYC Turin | 3nm / Zen 5 | 256 / 512? | 1024 MB L3? | DDR5-6000 / 8 TB? | PCIe Gen 6.0 / TBD | Up To 600W | SP5 | 2024-2025? |
| Intel Granite Rapids | Intel 4 / Redwood Cove | 120 / 240 | 240 MB L3? | DDR5-6400 / 4 TB? | PCIe Gen 6.0 / 128? | Up To 400W | Mountain Stream | 2024? |
| AMD EPYC Bergamo | 5nm / Zen 4C | 128 / 256 | 512 MB L3? | DDR5-5600 / 6 TB? | PCIe Gen 5.0 / TBD? | Up To 400W | SP5 | 2023 |
| Intel Emerald Rapids | Intel 7 / Raptor Cove | 64 / 128? | 120 MB L3? | DDR5-5600? / 4 TB? | PCIe Gen 5.0 / 80 | Up To 375W | Eagle Stream | 2023 |
| AMD EPYC Genoa | 5nm / Zen 4 | 96 / 192 | 384 MB L3? | DDR5-5200 / 4 TB? | PCIe Gen 5.0 / 128 | Up To 400W | SP5 | 2022 |
| Intel Sapphire Rapids | Intel 7 / Golden Cove | 56 / 112 | 105 MB L3 | DDR5-4800 / 4 TB | PCIe Gen 5.0 / 80 | Up To 350W | Eagle Stream | 2022 |