I couldn't help but notice that the CXL presentation Intel gave today contains a hint about the future of its Xe GPU ambitions. While it was not explicitly stated anywhere, the implication seems pretty obvious to me, so it is time to coin a term: Coherent Multi-GPU. CXL is Intel's new interconnect layer, designed to solve a lot of issues with the PCIe protocol, and one of the major reasons multi-GPU never took off properly is the lack of - you guessed it - coherency. I think it is very likely that we will see Xe GPUs running in "CXL Mode" in the future.

Intel CXL in a nutshell: Heterogeneous compute protocol for scaling processors over PCIe Gen 5 and beyond

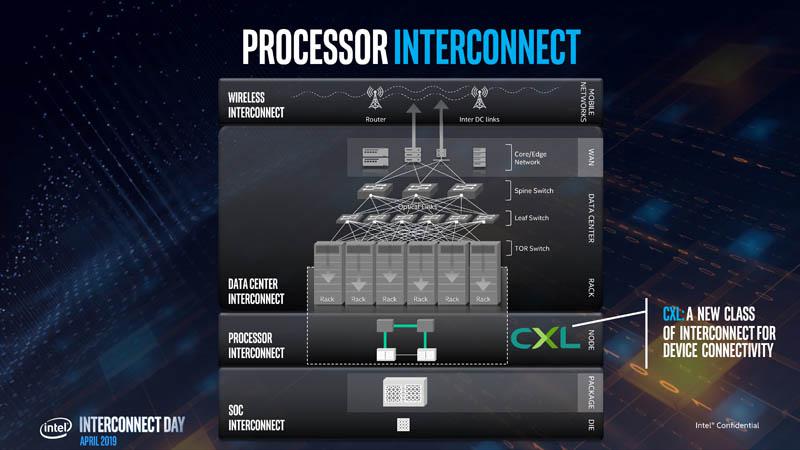

Intel discussed its brand new processor-to-processor interconnect, called the Compute Express Link (CXL), in detail at the 'Interconnect Day 2019' event yesterday. Whereas we have talked in depth about data centre interconnects before, this particular one connects devices over the physical PCIe port. I say physical and not just PCIe because, while Intel CXL is designed to work over the physical PCIe port to ensure universal compatibility, it will not use the PCIe protocol. Instead, it acts as an alternative protocol, one that is far more future proof and scalable than its archaic (soon-to-be) predecessor.

Rolling out significant capability upgrades over existing ports is very impressive in today's ecosystem, and what Intel is claiming isn't just a small capability improvement - it's a massive one. The first generation of CXL is designed to work over PCIe Gen 5 (so it is still a few years away) and is expected to accelerate the move to PCIe 6. We have had PCIe 3 for almost 8 years now, and Intel is getting ready to shorten the industry upgrade cycle for this standard. The switch between the PCIe and CXL protocols will be completely seamless.

How Intel CXL solves traditional PCIe Multi-GPU problems

The presentation Intel gave today on CXL was focused on the data centre aspect of it all, but we see a far more interesting angle for our reader base - one that the company did not explicitly state but is fairly obvious once you think about it. While CXL is pitched as the ultimate scaling fabric to glue together CPUs and accelerators there is no reason it cannot be used to achieve a finally-decent implementation of multi-GPU. This is what I will be focusing on in my coverage of CXL. Let's call it: coherent multi-GPU.

One of the primary issues with PCIe scaling right now is that any device connected through it has an isolated memory pool, high latency in processor-to-processor communication and no coherency with the rest of the system. This is why companies like NVIDIA and AMD have historically had to innovate on the software side with all sorts of multi-GPU techniques like split frame rendering or alternate frame rendering. The multiple GPUs did not act like a coherent whole - they acted independently and it showed. CXL aims to fix all that.
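To make those software workarounds concrete, here is a minimal Python sketch of the two classic ways work gets divided between GPUs: cutting each frame into horizontal strips (split frame rendering) or handing out whole frames in turn. The function names and the 1080-row frame are my own illustrative assumptions, not anything taken from an NVIDIA, AMD or Intel driver.

```python
def split_frame_rows(height: int, gpu_count: int) -> list[tuple[int, int]]:
    """Split frame rendering: give each GPU a contiguous band of rows.

    Returns one (start_row, end_row) pair per GPU, end_row exclusive.
    Leftover rows are handed to the first GPUs, one row each.
    """
    base, extra = divmod(height, gpu_count)
    ranges = []
    start = 0
    for gpu in range(gpu_count):
        rows = base + (1 if gpu < extra else 0)
        ranges.append((start, start + rows))
        start += rows
    return ranges


def frame_owner(frame_index: int, gpu_count: int) -> int:
    """Frame-by-frame alternation: GPUs take whole frames in round-robin order."""
    return frame_index % gpu_count


if __name__ == "__main__":
    print(split_frame_rows(1080, 2))            # two bands of 540 rows
    print([frame_owner(i, 2) for i in range(4)])  # GPUs alternate: 0, 1, 0, 1
```

In both schemes each GPU still works out of its own private memory, which is exactly the independence problem described above.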

With Intel CXL, a coherent memory pool can be created and the latency reduced by an order of magnitude. The whole system will act as a cohesive whole and will scale significantly better. This means that if you have a system running an Intel Xe dGPU, you can throw in another one in a spare PCIe slot and it should scale seamlessly. There are three protocols that CXL introduces:

CXL.io is an IO protocol that replaces PCIe for discovery, configuration, register access, interrupts and so on. Then you have CXL.cache and CXL.memory, which are the ones we are primarily interested in. These protocols allow the connected devices to access memory and cache in a coherent fashion. On paper, this is designed for the CPU to access the accelerator's VRAM and for the accelerator to access the CPU cache, but I see no reason why these protocols should not also allow multiple GPUs to access each other's memory buffers.
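As a rough mental model, the division of labour between the three protocols can be sketched as a simple lookup. This is only an illustration of the roles described above: the traffic labels and the `protocol_for` helper are hypothetical names of my own, not part of any CXL software interface.

```python
from enum import Enum


class CxlProtocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEMORY = "CXL.memory"


# Hypothetical traffic labels, mapped to the protocol the article says handles them.
TRAFFIC_TO_PROTOCOL = {
    "discovery": CxlProtocol.IO,
    "configuration": CxlProtocol.IO,
    "register_access": CxlProtocol.IO,
    "interrupt": CxlProtocol.IO,
    # The accelerator reaching into the CPU cache coherently.
    "accelerator_to_cpu_cache": CxlProtocol.CACHE,
    # The CPU reaching into accelerator-attached memory (VRAM).
    "cpu_to_accelerator_memory": CxlProtocol.MEMORY,
}


def protocol_for(traffic: str) -> CxlProtocol:
    """Return the CXL protocol that would carry the given kind of traffic."""
    return TRAFFIC_TO_PROTOCOL[traffic]


if __name__ == "__main__":
    for traffic in TRAFFIC_TO_PROTOCOL:
        print(f"{traffic:28s} -> {protocol_for(traffic).value}")
```

GPU-to-GPU access is deliberately missing from the table, since that is my speculation rather than something Intel has described.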

You know the pesky limitation of multi-GPU where the VRAM never added up? Well, CXL.memory and CXL.cache in conjunction should solve that. Intel has also stated that unlike other interconnects, CXL is designed for low latency - which is perfect for the multi-GPU approach. It is unclear at this time whether we can implement timing through CXL as well or whether that would still require 'syncing' connections like SLI bridges.
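The VRAM arithmetic is simple enough to write down. The sketch below assumes two hypothetical 8 GB cards and treats a coherent pool as an idealised best case where capacity simply adds up. Real-world pooling would lose something to duplication and overhead, so read the pooled figure as an upper bound rather than a promise.

```python
def usable_vram_gb(per_gpu_gb: float, gpu_count: int, pooled: bool) -> float:
    """Estimate the VRAM a game can actually use across several GPUs.

    Mirrored (traditional multi-GPU): every card holds its own copy of the
    same assets, so usable capacity equals a single card.
    Pooled (idealised coherent memory): capacities add up.
    """
    if pooled:
        return per_gpu_gb * gpu_count
    return per_gpu_gb


if __name__ == "__main__":
    # Hypothetical setup: two 8 GB cards.
    print("mirrored:", usable_vram_gb(8, 2, pooled=False), "GB")
    print("pooled:  ", usable_vram_gb(8, 2, pooled=True), "GB")
```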

Intel's CXL protocol is also asymmetric, which allows the system to accept not only accelerators (read: GPUs) but also coherent memory buffers, and provides for protocol interoperability. On top of that, this is a vastly more open standard that allows non-Intel processors to adopt the protocol, which is key if Intel wants it to be widely accepted. Coherency bias in the CXL protocol, unlike traditional protocols, means that the GPU in question does not have to stop at the processor while accessing memory; it can do so on its own, alleviating driver latency.

Of course, GPUs aside for a second, this approach is primarily being pitched for data centre configurations, so multi-socket setups and CCIX are what it is targeting from the get-go. Because of that, there is a host bias flow as well, so if you really want to, you can still use the older flow. Essentially, Intel is trying to create a heterogeneous computing infrastructure that allows CPUs, GPUs, accelerators, FPGAs and pretty much anything that can go on a PCIe port to be glued together as a cohesive whole.
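The difference between the two flows can be pictured with a toy model of the path a device takes to reach memory attached to it. This is strictly my own simplification: the `Bias` enum, the hop names and the idea of counting hops are assumptions for illustration and say nothing about how the hardware actually implements either flow.

```python
from enum import Enum


class Bias(Enum):
    HOST = "host"      # the older flow: accesses are routed via the processor
    DEVICE = "device"  # the device reaches its memory on its own


def access_path(bias: Bias) -> list[str]:
    """Return the hops a device takes to reach its attached memory (toy model)."""
    if bias is Bias.DEVICE:
        return ["device", "device_memory"]
    return ["device", "host_cpu", "device_memory"]


if __name__ == "__main__":
    for bias in Bias:
        path = access_path(bias)
        print(f"{bias.value:6s} bias: {' -> '.join(path)} ({len(path) - 1} hops)")
```

Fewer hops is the intuition behind the latency argument: skipping the stop at the processor removes a round trip from every access.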

The future: Intel Xe GPUs in CXL Mode?

Intel has been pretty tight-lipped about the details of its Xe GPU ambitions. All we know is that it will be scalable. It is a fairly good bet that multi-GPU is going to be involved in some way, either through CXL, through an MCM implementation or even both! It is also anyone's guess whether CXL mode for GPUs will make its way to the mainstream consumer segment for enthusiasts like us, but it is very much clear that it solves a lot of the problems that originally made multi-GPU setups unfeasible.

It has been a long time since there was any innovation in the software stack and protocols that control the flow of data between the GPU and the CPU, and it would be great to see this trickle down to the mainstream consumer level. If Intel starts out with a single Xe GPU, CXL mode could give it the edge to compete with higher-end variants from AMD and NVIDIA, and change the name of the game completely in the process.

If CXL can seamlessly scale GPUs, then the economics of the market would also change completely. People would be able to buy a cheaper GPU first and then simply add another one if they want more power. It would add much more flexibility to buying decisions and even alleviate buyer's remorse to some extent for the gaming class. If CXL mode trickles down to the consumer level anytime soon, then we might even see motherboard designs change drastically as multiple sockets and multiple GPUs become a feasible option. Needless to say, it looks like things are going to get pretty exciting in a few years.