Intel Sapphire Rapids-SP Xeon CPU Die Shot (Image Credits: @CarstenSpille)

A wide range of Intel Sapphire Rapids-SP Xeon CPUs has been detailed in terms of their specs and positioning within the server platform. The specs were shared by YuuKi_AnS and include 23 SKUs that will be part of the family later this year.

Intel Sapphire Rapids-SP Xeon CPU Lineup Specs & Tiers Detailed, At Least 23 SKUs In The Works

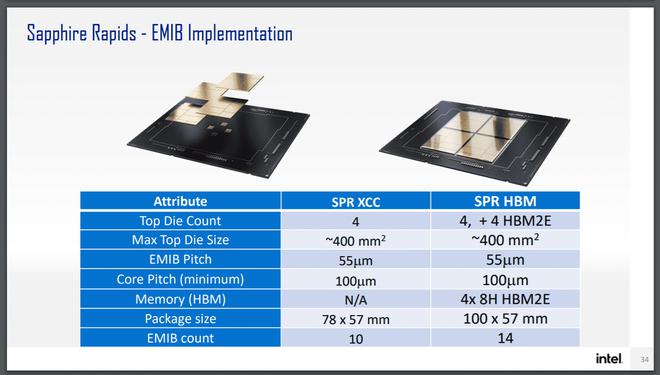

The Sapphire Rapids-SP family will replace the Ice Lake-SP family and will go all in on the 'Intel 7' process node (formerly 10nm Enhanced SuperFin), which made its formal debut with the Alder Lake consumer family. The server lineup will feature the performance-optimized Golden Cove core architecture, which delivers a 20% IPC improvement over the Willow Cove core architecture. The cores are spread across multiple tiles that are packaged together using EMIB.


Sandra Rivera, executive vice president and general manager of the Datacenter and AI Group at Intel Corporation, displays a wafer holding 4th Gen Intel Xeon Scalable processors (code-named Sapphire Rapids) before the opening of Intel Vision 2022 on May 10 in Dallas. During the hybrid event, Intel's leaders will announce advancements across silicon, software and services, showcasing how Intel brings together technologies and the ecosystem to unlock business value for customers today and in the future. (Credit: Walden Kirsch/Intel Corporation)

Intel Sapphire Rapids-SP 'Vanilla Xeon' CPUs:

For Sapphire Rapids-SP, Intel is using a four-tile chiplet design that will come in HBM and non-HBM flavors. While each tile is its own unit, the chip acts as a single SoC: every thread has full access to all resources on all tiles, providing consistently low latency and high cross-section bandwidth across the entire SoC.

We have already taken an in-depth look at the P-Core, but some of the key changes coming to the data center platform include AMX, AiA, FP16, and CLDEMOTE capabilities. The Accelerator Engines will increase the effectiveness of each core by offloading common-mode tasks to dedicated hardware, boosting performance and reducing the time needed to complete those tasks.

In terms of I/O advancements, Sapphire Rapids-SP Xeon CPUs will introduce CXL 1.1 for accelerator and memory expansion in the data center segment. Multi-socket scaling is also improved via Intel UPI, with up to four x24 UPI links at 16 GT/s and a new 8S-4UPI performance-optimized topology. The new tile architecture also boosts the cache beyond 100 MB, and the platform adds support for Optane Persistent Memory 300 series.

Intel Sapphire Rapids-SP 'HBM Xeon' CPUs:

Intel has also detailed its Sapphire Rapids-SP Xeon CPUs with HBM memory. From what Intel has shown, these Xeon CPUs will house up to four HBM packages, together offering significantly higher DRAM bandwidth than a baseline Sapphire Rapids-SP Xeon CPU with 8-channel DDR5 memory. This will allow Intel to offer a chip with both increased capacity and bandwidth for customers that demand it. The HBM SKUs can be used in two modes: an HBM Flat mode and an HBM caching mode.

The standard Sapphire Rapids-SP Xeon chip will feature 10 EMIB interconnects, and the entire package will measure a mighty 4446 mm². Moving over to the HBM variant, the interconnect count rises to 14, since the additional EMIBs are needed to connect the HBM2E memory to the compute tiles.


The four HBM2E memory packages will feature 8-Hi stacks, so Intel is going for at least 16 GB of HBM2E memory per stack, for a total of 64 GB across the Sapphire Rapids-SP package. As for the package itself, the HBM variant will measure an insane 5700 mm², or 28% larger than the standard variant. Compared to the recently leaked EPYC Genoa numbers, the HBM2E package for Sapphire Rapids-SP would end up 5% larger, while the standard package would be roughly 18% smaller (put another way, Genoa would be about 22% larger than the standard package).
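The capacity and area comparisons above are simple ratios. The short Python sketch below recomputes them from the quoted figures; note that the Genoa package area is not given directly, so it is back-derived here from the "5% larger" claim and should be treated as an assumption. On those inputs, the HBM package works out about 28% larger than the standard one, the implied Genoa package is roughly 5,430 mm², and the standard package comes out about 18% smaller than Genoa (equivalently, Genoa is about 22% larger than the standard package).

```python
# Back-of-the-envelope check of the package figures quoted above.
# Areas and stack counts are the leaked/reported numbers; the Genoa
# area is *derived* from the "HBM package is 5% larger" claim (assumption).

STANDARD_AREA_MM2 = 4446
HBM_AREA_MM2 = 5700
HBM_STACKS = 4
GB_PER_STACK = 16  # minimum capacity implied by 8-Hi HBM2E stacks


def pct_change(new: float, base: float) -> float:
    """Percentage difference of `new` relative to `base`."""
    return (new / base - 1.0) * 100.0


hbm_capacity_gb = HBM_STACKS * GB_PER_STACK
hbm_vs_standard = pct_change(HBM_AREA_MM2, STANDARD_AREA_MM2)

# If the HBM package is 5% larger than Genoa, Genoa is about 5700 / 1.05.
genoa_area_mm2 = HBM_AREA_MM2 / 1.05
standard_vs_genoa = pct_change(STANDARD_AREA_MM2, genoa_area_mm2)
genoa_vs_standard = pct_change(genoa_area_mm2, STANDARD_AREA_MM2)

print(f"HBM capacity:            {hbm_capacity_gb} GB")
print(f"HBM package vs standard: {hbm_vs_standard:+.1f}%")
print(f"Implied Genoa area:      {genoa_area_mm2:.0f} mm^2")
print(f"Standard vs Genoa:       {standard_vs_genoa:+.1f}%")
print(f"Genoa vs standard:       {genoa_vs_standard:+.1f}%")
```

The asymmetry between "18% smaller" and "22% larger" is just a matter of which package is used as the baseline.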

Intel Sapphire Rapids-SP Xeon CPU Platform

The Sapphire Rapids lineup will make use of 8-channel DDR5 memory with speeds of up to 4800 Mbps & support PCIe Gen 5.0 on the Eagle Stream platform (C740 chipset).
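For a sense of scale, the theoretical peak bandwidth of that memory configuration can be worked out from the channel count and transfer rate. This sketch assumes a 64-bit data path per DDR5 channel (ECC bits excluded) and ignores real-world efficiency losses, so the result is an upper bound rather than a measured figure.

```python
# Theoretical peak DRAM bandwidth for an 8-channel DDR5-4800 socket.
# Assumption: 64 data bits per channel (two 32-bit DDR5 subchannels),
# ECC excluded; sustained real-world bandwidth will be lower.

CHANNELS = 8
TRANSFER_RATE_MTPS = 4800        # DDR5-4800, mega-transfers per second
BYTES_PER_TRANSFER = 64 // 8     # 64-bit channel -> 8 bytes per transfer


def peak_bandwidth_gbps(channels: int, mtps: int, bytes_per_transfer: int) -> float:
    """Peak bandwidth in GB/s (decimal gigabytes)."""
    return channels * mtps * 1e6 * bytes_per_transfer / 1e9


per_channel = peak_bandwidth_gbps(1, TRANSFER_RATE_MTPS, BYTES_PER_TRANSFER)
per_socket = peak_bandwidth_gbps(CHANNELS, TRANSFER_RATE_MTPS, BYTES_PER_TRANSFER)

print(f"Per channel: {per_channel:.1f} GB/s")  # Per channel: 38.4 GB/s
print(f"Per socket:  {per_socket:.1f} GB/s")   # Per socket:  307.2 GB/s
```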

The Eagle Stream platform will also introduce the LGA 4677 socket, replacing the LGA 4189 socket used by Intel's existing Cedar Island and Whitley platforms, which house Cooper Lake-SP and Ice Lake-SP processors, respectively. The Intel Sapphire Rapids-SP Xeon CPUs will also come with the CXL 1.1 interconnect, marking a huge milestone for the blue team in the server segment.

The final 4th Gen Sapphire Rapids-SP Xeon CPU with its multi-chiplet design housing Compute & HBM2e tiles. (Image Credits: CNET)

Coming to the configurations, the top part is stated to feature 60 cores with a TDP of 350W. What is interesting about this configuration is that it is listed as a low-bin split variant, which means that it will be using a tile or MCM design. The Sapphire Rapids-SP Xeon CPU will be composed of a 4-tile layout with each tile featuring 15 cores.

Following are the expected configurations:

Now based on the specifications provided by YuuKi_AnS, the Intel Sapphire Rapids-SP Xeon CPUs will come in four tiers:

The TDPs listed here are PL1 ratings, so the PL2 rating, as seen earlier, is going to be very high, in the 400W+ range, and the BIOS limit is expected to hover around 700W+. Most of the CPU SKUs listed by the leaker are still in the ES1/ES2 stage, which means they are far from the final retail chips, but the core configurations are likely to remain the same. Intel will offer several SKUs with the same core configuration but different bins, affecting their clocks and TDPs. For example, there are four 44-core parts listed (three of them with 82.5 MB of cache), but clock speeds should vary across each SKU. There's also one Sapphire Rapids-SP HBM 'Gold' CPU in its A0 revision, which has 48 cores, 96 threads, and 90 MB of cache with a TDP of 350W. The 60-core part that leaked a while back isn't listed yet. Following is the entire SKU list that has been leaked:

Intel Sapphire Rapids-SP Xeon CPU SKUs List (Preliminary):

| QSPEC | Tier | Revision | Cores/Threads | L3 Cache | Clocks | TDP | Variant |
|---|---|---|---|---|---|---|---|
| QY36 | Platinum | C2 | 56/112 | 105 MB | N/A | 350W | ES2 |
| QXQH | Platinum | C2 | 56/112 | 105 MB | 1.6 GHz - N/A | 350W | ES1 |
| N/A | Platinum | B0 | 48/96 | 90 MB | 1.3 GHz - N/A | 350W | ES1 |
| QXQG | Platinum | C2 | 40/80 | 75 MB | 1.3 GHz - N/A | 300W | ES1 |
| QYGJ | Gold | A0 (HBM) | 48/96 | 90 MB | N/A | 350W | ES0/1 |
| QWAB | Gold | N/A | 44/88 | N/A | 1.4 GHz | N/A | TBC |
| QXPQ | Gold | C2 | 44/88 | 82.5 MB | N/A | 270W | ES1 |
| QXPH | Gold | C2 | 44/88 | 82.5 MB | N/A | 270W | ES1 |
| QXP4 | Gold | C2 | 44/88 | 82.5 MB | N/A | 270W | ES1 |
| N/A | Gold | B0 | 28/56 | 52.5 MB | 1.3 GHz - N/A | 270W | ES1 |
| QY0E (E127) | Gold | N/A | N/A | N/A | 2.2 GHz | N/A | TBC |
| QVV5 (C045) | Silver | A2 | 28/56 | 52.5 MB | N/A | 250W | ES1 |
| QXPM | Silver | C2 | 24/48 | 45 MB | 1.5 GHz - N/A | 225W | ES1 |
| QXLX (J115) | N/A | C2 | N/A | N/A | N/A | N/A | TBC |
| QWP6 (J105) | N/A | B0 | N/A | N/A | N/A | N/A | TBC |
| QWP3 (J048) | N/A | B0 | N/A | N/A | N/A | N/A | ES1 |
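One pattern in the leaked SKU list is that every entry giving both a core count and an L3 size works out to the same cache slice per core. The sketch below checks that with exact fractions, using only the rows that list both values. The 60-core projection at the end is purely hypothetical: it assumes the same per-core ratio carries over to the unlisted part, which the leak does not confirm.

```python
from fractions import Fraction

# (label, cores, L3 cache in MB) for leaked rows that list both values.
# Data copied from the preliminary ES-sample table; subject to change.
LEAKED_SKUS = [
    ("QY36", 56, "105"),
    ("QXQH", 56, "105"),
    ("Platinum B0 (no QSPEC)", 48, "90"),
    ("QXQG", 40, "75"),
    ("QYGJ (HBM)", 48, "90"),
    ("QXPQ", 44, "82.5"),
    ("QXPH", 44, "82.5"),
    ("QXP4", 44, "82.5"),
    ("Gold B0 (no QSPEC)", 28, "52.5"),
    ("QVV5", 28, "52.5"),
    ("QXPM", 24, "45"),
]


def l3_per_core(cores: int, l3_mb: str) -> Fraction:
    """Exact L3 cache per core in MB (Fraction avoids float rounding)."""
    return Fraction(l3_mb) / cores


ratios = {label: l3_per_core(cores, mb) for label, cores, mb in LEAKED_SKUS}
distinct = set(ratios.values())
print(distinct)  # {Fraction(15, 8)}, i.e. 1.875 MB per core

# Hypothetical extrapolation: the unlisted 60-core part, *if* it kept the ratio.
(per_core,) = distinct
print(float(per_core * 60))  # 112.5
```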

Once again, most of these configurations don't reflect final specs since they are still early samples. Some of the A/B/C-stepping parts (marked in red in the original leak) are said to be unusable except with a special BIOS that still has a lot of bugs. This list does give us an idea of what to expect in terms of SKUs and tiers, but we will have to wait for the official announcement later this year for accurate specs for each SKU.

It looks like AMD will still hold the upper hand in the number of cores and threads offered per CPU, with its Genoa chips pushing up to 96 cores, whereas Intel Xeon chips would max out at 60 cores unless Intel plans SKUs with a higher number of tiles. Intel, however, will have a wider and more expandable platform that can support up to 8 CPUs at once, so unless Genoa offers more than 2P (dual-socket) configurations, Intel will lead in the number of cores per system, with an 8S configuration packing up to 480 cores and 960 threads.
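The per-system figures in this comparison are straightforward multiplication. This sketch assumes 60 cores per Intel socket in an 8S system, 96 cores per Genoa socket in a 2P system, and two threads per core on both sides; the helper name `system_totals` is illustrative only.

```python
# Max cores/threads per system under the article's assumptions.
# Assumptions: Intel 8 sockets x 60 cores; AMD Genoa 2 sockets x 96 cores;
# both run 2 threads per core (Hyper-Threading / SMT).


def system_totals(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> tuple[int, int]:
    """Return (total cores, total threads) for a multi-socket system."""
    cores = sockets * cores_per_socket
    return cores, cores * threads_per_core


intel_8s = system_totals(8, 60)
amd_2p = system_totals(2, 96)

print(f"Intel 8S: {intel_8s[0]} cores / {intel_8s[1]} threads")  # 480 cores / 960 threads
print(f"AMD 2P:   {amd_2p[0]} cores / {amd_2p[1]} threads")      # 192 cores / 384 threads
```

Per socket AMD leads, but the socket count dominates the per-system total, which is why the 8S topology matters in this comparison.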

Recently, Intel announced during its Vision event that the company is shipping its initial Sapphire Rapids-SP Xeon SKUs to customers and is on track for a Q4 2022 launch.

Intel Xeon SP Families (Preliminary):

| Family Branding | Skylake-SP | Cascade Lake-SP/AP | Cooper Lake-SP | Ice Lake-SP | Sapphire Rapids | Emerald Rapids | Granite Rapids | Diamond Rapids |
|---|---|---|---|---|---|---|---|---|
| Process Node | 14nm+ | 14nm++ | 14nm++ | 10nm+ | Intel 7 | Intel 7 | Intel 3 | Intel 3? |
| Platform Name | Intel Purley | Intel Purley | Intel Cedar Island | Intel Whitley | Intel Eagle Stream | Intel Eagle Stream | Intel Mountain Stream / Intel Birch Stream | Intel Mountain Stream / Intel Birch Stream |
| Core Architecture | Skylake | Cascade Lake | Cascade Lake | Sunny Cove | Golden Cove | Raptor Cove | Redwood Cove? | Lion Cove? |
| IPC Improvement (Vs Prev Gen) | 10% | 0% | 0% | 20% | 19% | 8%? | 35%? | 39%? |
| MCP (Multi-Chip Package) SKUs | No | Yes | No | No | Yes | Yes | TBD (Possibly Yes) | TBD (Possibly Yes) |
| Socket | LGA 3647 | LGA 3647 | LGA 4189 | LGA 4189 | LGA 4677 | LGA 4677 | TBD | TBD |
| Max Core Count | Up To 28 | Up To 28 | Up To 28 | Up To 40 | Up To 56 | Up To 64? | Up To 120? | Up To 144? |
| Max Thread Count | Up To 56 | Up To 56 | Up To 56 | Up To 80 | Up To 112 | Up To 128? | Up To 240? | Up To 288? |
| Max L3 Cache | 38.5 MB L3 | 38.5 MB L3 | 38.5 MB L3 | 60 MB L3 | 105 MB L3 | 120 MB L3? | 240 MB L3? | 288 MB L3? |
| Vector Engines | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-512/FMA2 | AVX-1024/FMA3? | AVX-1024/FMA3? |
| Memory Support | DDR4-2666 6-Channel | DDR4-2933 6-Channel | Up To 6-Channel DDR4-3200 | Up To 8-Channel DDR4-3200 | Up To 8-Channel DDR5-4800 | Up To 8-Channel DDR5-5600? | Up To 12-Channel DDR5-6400? | Up To 12-Channel DDR6-7200? |
| PCIe Gen Support | PCIe 3.0 (48 Lanes) | PCIe 3.0 (48 Lanes) | PCIe 3.0 (48 Lanes) | PCIe 4.0 (64 Lanes) | PCIe 5.0 (80 Lanes) | PCIe 5.0 (80 Lanes) | PCIe 6.0 (128 Lanes)? | PCIe 6.0 (128 Lanes)? |
| TDP Range (PL1) | 140W-205W | 165W-205W | 150W-250W | 105W-270W | Up To 350W | Up To 375W? | Up To 400W? | Up To 425W? |
| 3D XPoint Optane DIMM | N/A | Apache Pass | Barlow Pass | Barlow Pass | Crow Pass | Crow Pass? | Donahue Pass? | Donahue Pass? |
| Competition | AMD EPYC Naples 14nm | AMD EPYC Rome 7nm | AMD EPYC Rome 7nm | AMD EPYC Milan 7nm+ | AMD EPYC Genoa ~5nm | AMD Next-Gen EPYC (Post Genoa) | AMD Next-Gen EPYC (Post Genoa) | AMD Next-Gen EPYC (Post Genoa) |
| Launch | 2017 | 2018 | 2020 | 2021 | 2022 | 2023? | 2024? | 2025? |