
By 2025, 85% of enterprises are expected to adopt a cloud-first principle, hosting data and workloads in the cloud rather than on-premises. The shift to cloud computing, amplified by COVID-19 and remote work, has brought companies a whole host of benefits: lower IT costs, increased efficiency and reliable security.

As this trend continues to boom, the threat of service disruptions and outages grows with it. Cloud providers are highly reliable, but they are “not immune to failure.” In December 2021, Amazon reported that multiple Amazon Web Services (AWS) APIs were affected by an outage, and within minutes many widely used websites went down.

So, how can companies mitigate cloud risk, prepare for the next AWS outage and accommodate sudden spikes in demand?

The answer is scalability and elasticity, two essential aspects of cloud computing that greatly benefit businesses. Let’s look at the differences between scalability and elasticity and see how each can be built in at the cloud infrastructure, application and database levels.

Understand the difference between scalability and elasticity

Both scalability and elasticity relate to the number of requests a cloud system can handle concurrently. They are not mutually exclusive, and a system may need to support each of them separately.

Scalability is the ability of a system to remain responsive as the number of users and the volume of traffic gradually increase over time. It therefore concerns long-term growth that is strategically planned. Most B2B and B2C applications that gain usage will need it to ensure reliability, high performance and uptime.

With a few minor configuration changes and button clicks, a company can scale its cloud system up or down in a matter of minutes. In many cases, cloud platforms can automate this by applying scale factors at the server, cluster and network levels, reducing engineering labor costs.
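Because scalability is planned growth, much of it comes down to simple capacity arithmetic. The sketch below is a minimal illustration, not a provider's sizing tool: it assumes a steady monthly growth rate, a per-instance throughput measured in your own load tests and a fixed headroom margin. The function name `plan_capacity` and all input values are hypothetical.

```python
import math


def plan_capacity(peak_rps: float, monthly_growth: float, rps_per_instance: float,
                  months: int, headroom: float = 0.3) -> list[int]:
    """Instance counts needed at the end of each month under planned growth.

    peak_rps: today's observed peak requests per second.
    monthly_growth: expected growth per month as a fraction (0.10 = 10%).
    rps_per_instance: load one instance handles at acceptable latency
        (hypothetical; in practice this comes from performance testing).
    headroom: spare capacity kept on top of the projected peak.
    """
    plan = []
    for month in range(1, months + 1):
        # Compound the growth rate to project next month's peak.
        projected = peak_rps * (1 + monthly_growth) ** month
        # Add headroom, then round up to whole instances.
        plan.append(math.ceil(projected * (1 + headroom) / rps_per_instance))
    return plan


if __name__ == "__main__":
    # Hypothetical inputs: 400 req/s today, 10% monthly growth, 100 req/s per instance.
    print(plan_capacity(400, 0.10, 100, months=6))
```

Running a projection like this each planning cycle gives the tech team a quantitative basis for scaling decisions instead of guesswork.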

Elasticity is the ability of a system to remain responsive during short-term bursts or high instantaneous spikes in load. Some examples of systems that regularly face elasticity issues include NFL ticketing applications, auction systems and insurance companies during natural disasters. In 2020, the NFL was able to lean on AWS to livestream its virtual draft, when it needed far more cloud capacity.
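Elasticity, by contrast, is reactive: capacity follows the current load rather than a forecast. One well-known rule for this is the formula used by the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric). The sketch below borrows that formula for illustration. The function name, the default bounds and the 10% tolerance are assumptions, not any cloud provider's actual configuration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """Reactive replica count, modeled on the Kubernetes HPA formula
    desired = ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Ignore small deviations from the target so the system doesn't flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (the max also caps runaway costs).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # A burst: 4 replicas running at 180% of the 60% CPU target -> scale out.
    print(desired_replicas(4, 180, 60))
    # After the burst: 12 replicas at 20% CPU -> scale back in.
    print(desired_replicas(12, 20, 60))
```

Scaling back in matters as much as scaling out: an elastic system releases capacity when the spike ends, which is where the cost savings come from.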

A business that experiences unpredictable workloads but doesn’t want a preplanned scaling strategy might seek an elastic solution in the public cloud, with lower maintenance costs. Such a solution is managed by a third-party provider and shared with multiple organizations over the public internet.

So, does your business have predictable workloads, highly variable ones, or both?

Work out scaling options with cloud infrastructure

When it comes to scalability, businesses must watch out for over-provisioning and under-provisioning. These happen when tech teams don’t provide quantitative metrics for their applications’ resource requirements, or when the back-end approach to scaling is not aligned with business goals. Ongoing performance testing is essential to determine a right-sized solution.

Business leaders reading this should ask their tech teams how they determine their cloud provisioning requirements. IT teams should continually measure response time, request volume, CPU load and memory usage to keep track of the cost of goods sold (COGS) associated with cloud expenses.
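As a minimal sketch of how those measurements can flag a sizing problem, the function below classifies a service from its CPU utilization samples. It looks at the 95th percentile so that brief spikes don’t dominate. The function name and the 30%/80% thresholds are hypothetical, and a real check would also weigh response time and memory usage.

```python
from statistics import quantiles


def provisioning_status(cpu_samples: list[float], low: float = 30.0, high: float = 80.0) -> str:
    """Classify provisioning from CPU utilization samples (percent).

    The low/high thresholds are illustrative assumptions; derive real ones
    from your own performance testing.
    """
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    p95 = quantiles(cpu_samples, n=20)[-1]
    if p95 > high:
        return "under-provisioned"  # little headroom: latency will suffer under load
    if p95 < low:
        return "over-provisioned"   # paying for capacity that sits idle
    return "right-sized"


if __name__ == "__main__":
    print(provisioning_status([10, 12, 15, 11, 14, 13] * 5))
    print(provisioning_status([50, 55, 60, 65] * 5))
```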

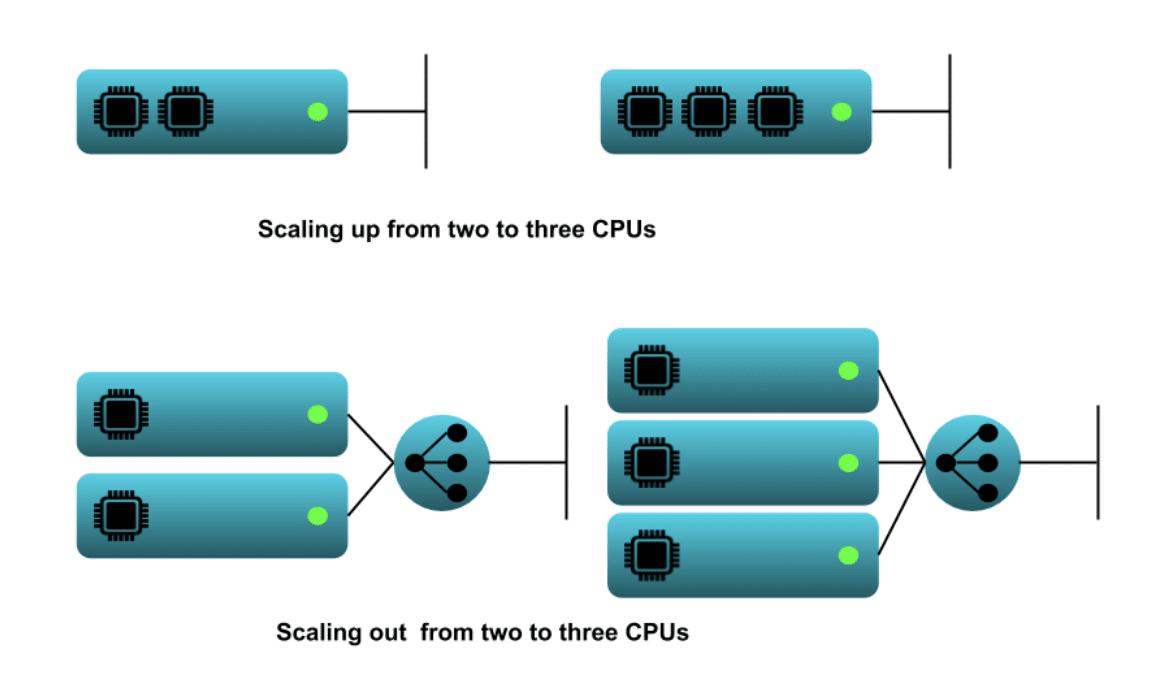

There are various scaling techniques available to organizations based on business needs and technical constraints. So, will you scale up or out?

Vertical scaling means scaling up or down. It is used for monolithic applications, often ones built before 2017, that may be difficult to refactor. It involves adding resources such as RAM or processing power (CPU) to your existing server when the workload increases, which means scaling is capped by the capacity of the largest available server. It requires no changes to the application architecture, because you are moving the same application, files and database to a larger machine.
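The ceiling on vertical scaling is easy to see in code. The sketch below picks the smallest single server that fits a workload from a catalog of sizes. The catalog, its size names and the function name `scale_up` are hypothetical and do not correspond to any provider’s real instance types.

```python
# Hypothetical instance catalog: (name, vCPUs, memory in GiB), smallest first.
INSTANCE_SIZES = [
    ("small", 2, 8),
    ("medium", 4, 16),
    ("large", 8, 32),
    ("xlarge", 16, 64),
]


def scale_up(required_vcpus: int, required_mem_gib: int) -> str:
    """Return the smallest single server that fits the workload.

    Vertical scaling has a hard ceiling: if even the largest size is too
    small, the only options left are scaling out or refactoring.
    """
    for name, vcpus, mem in INSTANCE_SIZES:
        if vcpus >= required_vcpus and mem >= required_mem_gib:
            return name
    raise ValueError("workload exceeds the largest available server; "
                     "vertical scaling limit reached")


if __name__ == "__main__":
    print(scale_up(3, 10))  # the same application simply moves to a bigger machine
```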

Horizontal scaling means scaling in or out: adding servers to (or removing them from) the original cloud infrastructure so that they work together as a single system. Each server needs to be independent so that servers can be added or removed separately. This entails many architectural and design considerations around load balancing, session management, caching and communication. Legacy applications that were not designed for distributed computing must be refactored carefully before they can be migrated to this model. Horizontal scaling is especially important for businesses running high-availability services that require minimal downtime and high performance, storage and memory.
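Load balancing is what lets independent servers act as a single system. Here is a minimal round-robin sketch. The class and server names are hypothetical, and it assumes the servers are stateless (for example, session data kept in a shared store), so any server can take any request and servers can be added or removed at will.

```python
import itertools


class RoundRobinBalancer:
    """Spreads requests across interchangeable, stateless servers.

    Because the servers are independent, they can be added (scale out) or
    removed (scale in) at any time; the rotation is rebuilt from the pool.
    """

    def __init__(self, servers):
        self._servers = list(servers)
        self._cycle = itertools.cycle(self._servers)

    def add(self, server):
        # Scale out: the new server joins the rotation.
        self._servers.append(server)
        self._cycle = itertools.cycle(self._servers)

    def remove(self, server):
        # Scale in: the server leaves the rotation.
        self._servers.remove(server)
        self._cycle = itertools.cycle(self._servers)

    def route(self, request):
        """Return (server, request) for the next server in the rotation."""
        if not self._servers:
            raise RuntimeError("no servers available")
        return next(self._cycle), request


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2"])
    lb.add("app-3")
    print([lb.route(i)[0] for i in range(6)])
```

Rebuilding the rotation restarts it at the first server; production load balancers also run health checks and drain connections before removing a server.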

If you are unsure which scaling technique better suits your company, you may need to consider a third-party cloud engineering automation platform to help manage your scaling needs, goals and implementation.

Weigh up how application architectures affect scalability and elasticity

Let’s take a simple healthcare application (the same applies to many other industries) to see how it can be developed across different architectures and how each choice affects scalability and elasticity. Healthcare services came under heavy pressure and had to scale drastically during the COVID-19 pandemic, and they could have benefited from cloud-based solutions.

At a high level, there are two types of architectures: monolithic and distributed. Monolithic architectures (including layered, modular monolith, pipeline and microkernel architectures) are not natively built for efficient scalability and elasticity: all the modules are contained within the main body of the application, and as a result the entire application is deployed as a single whole. There are three types of distributed architectures: event-driven, microservices and space-based.

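The simple healthcare application has three portals: a patient portal (which includes the patient registration and appointment scheduling modules), a physician portal and an office portal.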

The hospital’s services are in high demand, and to support the growth, they need to scale the patient registration and appointment scheduling modules. This means they only need to scale the patient portal, not the physician or office portals. Let’s break down how this application can be built on each architecture.

Monolithic architecture

Tech-enabled startups, including those in healthcare, often choose this traditional, unified model of software design for its speed-to-market advantage. But it is not an optimal solution for businesses that require scalability and elasticity, because there is a single integrated instance of the application and a single centralized database.

For application scaling, adding more instances of the application behind a load balancer scales out the physician and office portals along with the patient portal, even though the business doesn’t need that.

Most monolithic applications use a monolithic database, which is one of the most expensive cloud resources. Its cost rises steeply with scale, which makes this arrangement expensive, especially in maintenance time for development and operations engineers.

Another aspect that makes monolithic architectures unsuitable for elasticity and scalability is the mean time to startup (MTTS): the time a new instance of the application takes to start. It usually takes several minutes because of the large scope of the application and database: each new instance must create its supporting functions, dependencies, objects and connection pools and establish secure connectivity to other services.
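A back-of-the-envelope calculation shows why MTTS matters so much for elasticity. Until a new instance is ready, every request above current capacity has to be queued, slowed down or dropped. The startup times below are hypothetical values chosen only to match the orders of magnitude in this article (minutes, seconds, milliseconds), and the 200 req/s spike is likewise illustrative.

```python
def backlog_during_startup(excess_rps: float, mtts_seconds: float) -> float:
    """Requests arriving beyond current capacity while a new instance starts.

    Until the new instance is ready, this excess load must be queued, slowed
    down or dropped, so the backlog grows linearly with MTTS.
    """
    return excess_rps * mtts_seconds


if __name__ == "__main__":
    # Hypothetical spike of 200 req/s above current capacity.
    for label, mtts in [("monolith", 300.0), ("microservice", 5.0), ("space-based", 0.005)]:
        print(f"{label:>12}: {backlog_during_startup(200, mtts):,.0f} requests backlog")
```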

Event-driven architecture

Event-driven architecture is better suited than monolithic architecture to scaling and elasticity. It works by publishing an event whenever something notable happens. For example, a shopper on an ecommerce site orders an item during a busy period and later receives an email saying it is out of stock. When the front end is scaled without scaling the back end, asynchronous messaging and queues absorb the extra requests and provide back-pressure.
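Back-pressure from a bounded queue can be sketched with Python’s `asyncio.Queue`. In this minimal example, a fast “front end” produces orders while a slower, unscaled “back end” consumes them. Once the queue holds `maxsize` items, `put()` suspends the producer until the consumer catches up, so the back end is never flooded. The queue size, the order names and the 1 ms processing time are assumptions for illustration, and an in-process queue stands in for a real message broker.

```python
import asyncio


async def front_end(queue: asyncio.Queue, n_requests: int) -> None:
    # Scaled-out front end: produces requests faster than the back end consumes them.
    for i in range(n_requests):
        # put() suspends while the queue is full: this is the back-pressure.
        await queue.put(f"order-{i}")
    await queue.put(None)  # sentinel: no more requests


async def back_end(queue: asyncio.Queue, processed: list) -> None:
    # Unscaled back end: drains the queue at its own pace.
    while (item := await queue.get()) is not None:
        await asyncio.sleep(0.001)  # simulated processing time
        processed.append(item)


async def main(n_requests: int = 20, max_queue: int = 5) -> list:
    queue: asyncio.Queue = asyncio.Queue(maxsize=max_queue)
    processed: list = []
    await asyncio.gather(front_end(queue, n_requests), back_end(queue, processed))
    return processed


if __name__ == "__main__":
    print(len(asyncio.run(main())))
```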

In this healthcare case study, a distributed architecture would make each module its own event processor, with the flexibility to distribute or share data across one or more modules. Because the services are no longer coupled, there is some flexibility to scale at both the application and database levels.
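To make “each module is its own event processor” concrete, here is a minimal in-process publish/subscribe sketch. The event names, handlers and `EventBus` class are hypothetical. A production system would use a message broker and deliver events asynchronously, whereas this bus calls handlers synchronously for simplicity. The point is that publishers don’t know who consumes an event, so modules stay decoupled and can be scaled separately.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-process publish/subscribe bus (a stand-in for a real broker)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        # Each module registers a processor for the event types it cares about.
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher doesn't know which modules (if any) will react.
        for handler in self._subscribers[event_type]:
            handler(payload)


log: list[str] = []
bus = EventBus()
# Hypothetical event processors for the healthcare modules.
bus.subscribe("patient.registered", lambda e: log.append(f"scheduling: open booking for {e['patient_id']}"))
bus.subscribe("patient.registered", lambda e: log.append(f"office: create billing account for {e['patient_id']}"))
bus.subscribe("appointment.booked", lambda e: log.append(f"physician: add {e['patient_id']} to calendar"))

bus.publish("patient.registered", {"patient_id": "p-123"})
```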

Microservices architecture

This architecture treats each service as single-purpose, giving businesses the ability to scale each service independently and avoid consuming valuable resources unnecessarily. For database scaling, the persistence layer can be designed and set up exclusively for each service so that it scales individually.
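The difference from the monolith can be shown with the healthcare example. In this sketch, each service is sized from its own load, whereas a monolith replica carries every module, so the busiest module sets the replica count for all of them. The load figures and per-instance throughput are hypothetical, and counting “module instances” is only a rough proxy for cloud resources.

```python
import math

# Hypothetical peak load (req/s) per module and throughput of one instance.
LOAD = {"patient_portal": 900, "physician_portal": 120, "office_portal": 60}
PER_INSTANCE_RPS = {"patient_portal": 150, "physician_portal": 150, "office_portal": 150}


def microservice_instances(load: dict, capacity: dict) -> dict:
    """Each service is sized from its own load only (at least one instance)."""
    return {svc: max(1, math.ceil(load[svc] / capacity[svc])) for svc in load}


def monolith_instances(load: dict, capacity: dict) -> dict:
    """A monolith replica carries every module, so the busiest module sets the
    replica count and every other module is copied along with it."""
    replicas = max(math.ceil(load[svc] / capacity[svc]) for svc in load)
    return {svc: replicas for svc in load}


if __name__ == "__main__":
    micro = microservice_instances(LOAD, PER_INSTANCE_RPS)
    mono = monolith_instances(LOAD, PER_INSTANCE_RPS)
    print("microservices:", micro, "total", sum(micro.values()))
    print("monolith:     ", mono, "total", sum(mono.values()))
```

Under these assumptions, only the patient portal grows with demand in the microservices case, while the monolith also replicates the physician and office portals.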

Like event-driven architectures, microservices cost more in cloud resources than monolithic architectures at low levels of usage. However, with increasing loads, multitenant implementations and traffic bursts, they are more economical. MTTS is also short, typically measured in seconds, thanks to the fine-grained services.

However, given the sheer number of services and their distributed nature, debugging may be harder, and maintenance costs may be higher if services aren’t fully automated.

Space-based architecture

This architecture is based on a principle called tuple-space processing: multiple parallel processors with shared memory. It maximizes both scalability and elasticity at the application and database levels.

All application interactions take place with the in-memory data grid. Calls to the grid are asynchronous, and event processors can scale independently. For database scaling, a background data writer updates the database: each service sends its insert, update and delete operations to the data writer, where they are queued to be picked up.

MTTS is extremely short, usually a few milliseconds, because all data interactions are with in-memory data. However, all services must connect to the broker, and the initial cache load must be created with a data reader.
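The data flow described above (an initial cache load by a data reader, reads and writes against memory, and a background data writer draining queued changes into the database) can be sketched in a few dozen lines. This is a toy, single-process illustration under stated assumptions: a plain `dict` stands in for the database, a thread and a `queue.Queue` stand in for the data writer and its queue, and the broker is not modeled. The class and method names are hypothetical.

```python
import queue
import threading


class InMemoryDataGrid:
    """Toy space-based sketch: services use an in-memory grid, while a
    background data writer persists changes to the database asynchronously.

    `database` is a plain dict standing in for a real database (an assumption).
    """

    def __init__(self, database: dict):
        self._db = database
        # Data reader: initial cache load from the database into memory.
        self._grid = dict(database)
        self._lock = threading.Lock()
        self._pending: queue.Queue = queue.Queue()
        self._writer = threading.Thread(target=self._data_writer, daemon=True)
        self._writer.start()

    def get(self, key):
        # Reads never touch the database.
        with self._lock:
            return self._grid.get(key)

    def put(self, key, value):
        # Update memory immediately, then queue the change for the data writer.
        with self._lock:
            self._grid[key] = value
        self._pending.put(("upsert", key, value))

    def delete(self, key):
        with self._lock:
            self._grid.pop(key, None)
        self._pending.put(("delete", key, None))

    def _data_writer(self):
        # Background data writer: applies queued operations to the database.
        while True:
            op, key, value = self._pending.get()
            if op == "upsert":
                self._db[key] = value
            else:
                self._db.pop(key, None)
            self._pending.task_done()

    def flush(self):
        """Block until every queued change has reached the database."""
        self._pending.join()


if __name__ == "__main__":
    db = {"p-1": "registered"}
    grid = InMemoryDataGrid(db)
    grid.put("p-2", "booked")
    grid.flush()
    print(db)
```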

In this digital age, companies want to increase or decrease IT resources as needed to meet changing demands. The first step is moving from large monolithic systems to a distributed architecture to gain a competitive edge, as Netflix, Lyft, Uber and Google have done. However, the choice of architecture is subjective, and it must be based on the capabilities of developers, mean load, peak load, budgetary constraints and business-growth goals.

Sashank is a serial entrepreneur with a keen interest in innovation.

DataDecisionMakers

Welcome to the VentureBeat community!

DataDecisionMakers is where experts, including the technical people doing data work, can share data-related insights and innovation.
