We currently generate about 2.5 quintillion (million trillion) bytes of data worldwide each day. In just four days, the total number of bytes generated is roughly equivalent to the entire population of insects on Earth. On the receiving end of much of this data are businesses of all shapes and sizes. Their futures as businesses depend on how they convert collected data into trusted business intelligence that's successfully applied and monetized.

"[U]nlocking business value from all data is paramount," wrote David Stodder, TDWI senior research director for business intelligence, in a Q3 2021 Pulse Report. "People need the means to explore, analyze, visualize and share data insights easily and creatively so they can address changing circumstances and make informed decisions."

The ability to harness, analyze and monetize the daily rush of data into corporate coffers rests with artificial intelligence -- big data's great equalizer. Machine learning models can yield outcomes that influence every aspect of an enterprise's operations from finance to product development to customer buying patterns. Yet businesses grapple with advancing machine learning projects beyond the pilot stage, thus slowing or sabotaging their efforts to deploy AI models in a timely fashion.

"Due to the headaches that AI deployments create, organizations are viewing the time it takes to get a gold model into production as an opportunistic area of improvement," reported Enterprise Strategy Group (ESG), a division of TechTarget. "With the speed at which data changes in a modern and dynamic business, organizations are increasingly feeling that it is unacceptable to take nearly a month to operationalize AI."

In this video, Cognilytica's Kathleen Walch and Ron Schmelzer address the headwinds businesses encounter in machine learning project pilots, all of which can lead to costly delays in model deployment. It all starts with asking the right questions about business visibility and application, data quality and quantity, infrastructure and execution, staffing and expertise, and vendor and product selection. The answers to these questions will determine whether the machine learning project is a go or no-go.

Transcript

Kathleen Walch: Hello everybody, and welcome to this webinar, "How to move your machine learning project past pilot." This is going to be an overview of the methodology for doing AI projects, right, we're going to talk about, you know, why use AI at all and then some pitfalls to avoid. This is presented by Cognilytica analysts Kathleen Walch and Ron Schmelzer.

So, a little bit about Cognilytica in case you're unfamiliar with us: Cognilytica is an AI- and cognitive technology-focused research advisory and education firm. We produce market research, advisory and guidance on artificial intelligence, machine learning and cognitive technology. We also produce the popular AI Today podcast. We've been doing it for about four years, so you might have heard us there. We also have an infographic series, white paper and other popular content as well on our website. We're focused on enterprise and public sector adoption of AI, and we also are contributing writers to both Forbes and TechTarget.

Ron Schmelzer: Yeah. So hopefully, you've read a lot of our articles on the topics of AI and machine learning. And the goal here for us now is to help you, if you're working with a machine learning project, move past some of the struggles you might be having in making those machine learning projects a reality. So, let's move on now to our next slide here.

And I think one of the biggest roadblocks for a lot of folks who are trying to move their machine learning projects forward is making sure that they're solving the right problem. A lot of times, you know, one of the most fundamental issues with AI is that people are trying to apply AI to a problem that it's really not very well suited for. And one of the things we can look at as we go is, what is AI really, really well suited for? One thing that we talk about in our research is these seven patterns of AI, because the problem with AI is that it is a little bit of a general term. And the challenge is when two different people are talking about AI, they may not be talking about the same thing.

In general, without going into detail, we have these patterns. You could have AI systems that are good at classifying or identifying -- the recognition pattern -- or using natural language processing to do conversational systems. Or they can take advantage of big data and find patterns in that big data, or anomalies in the big data, or help you make better predictions with predictive analytics. We can also have machine learning systems that can help us do things that humans would otherwise do with autonomous systems. Or, maybe find the optimal solution to a puzzle or a game or something like that, or some scenario, which is called goal-driven systems. Or, we could actually have our AI and machine learning systems chew through enormous amounts of data to help create a profile of an individual. What all these share in common is that we're using data to derive insights. And, because of that, it's not like we're writing rules; we're using probability, we're using statistics. And if we can't write a rule for a system but we need the machine to do something, that's a good signal that AI and machine learning might be a good solution for that. If it's probabilistic, which is what machine learning systems are, then we should use a learning model. That's machine learning.

Walch: Right. So, it's important to understand when to use AI, and it's also important to understand what it's not suited for. So if you have a repetitive, deterministic automation task, don't use artificial intelligence and machine learning. If you have formulaic analytics, then go ahead and do that. Also, systems that require 100% accuracy. Because it's probabilistic and not deterministic, you can never get 100% accuracy -- and if that's what you require, artificial intelligence and machine learning is not the right tool for this. Situations with very little training data -- you know, the question always is how much training data do I really need? And we say it depends on which pattern you're trying to do. But in general, if you don't have a lot of training data -- you know, it's very, very minimal -- probably not a good fit for AI and cognitive technologies. Also, situations where hiring a person just may be easier, cheaper and faster. You know, it takes time to build these systems, you can't just grab it and start using it. So, if it's going to be a small project, or something where just hiring a human is easier, that might be a better solution. And also don't do AI just to do AI because it's a cool factor or a buzzword, people are talking about it. Make sure that it's actually providing value and being used in the right situation. So as Ron mentioned earlier, if it's probabilistic, go ahead with AI; if it's deterministic, use a programming approach instead.
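To make the deterministic-versus-probabilistic distinction concrete, here is a minimal Python sketch. It is an illustration only, not something shown in the webinar: the function names, the 8% tax rate and the toy spam examples are all assumptions, and the word-frequency estimate stands in for a real learning model.

```python
from collections import Counter


def sales_tax(amount: float, rate: float = 0.08) -> float:
    """Deterministic task: a fixed rule gives the same exact answer every time.

    The 8% rate is a made-up value for illustration.
    """
    return round(amount * rate, 2)


def train_spam_estimator(examples):
    """Probabilistic task: estimate a spam score from labeled examples.

    `examples` is a list of (text, is_spam) pairs. This toy word-frequency
    estimate is a stand-in for a real learning model.
    """
    spam_counts, total_counts = Counter(), Counter()
    for text, is_spam in examples:
        for word in set(text.lower().split()):
            total_counts[word] += 1
            if is_spam:
                spam_counts[word] += 1

    def predict(text: str) -> float:
        # Score only the words that appeared in the training examples.
        words = [w for w in set(text.lower().split()) if w in total_counts]
        if not words:
            return 0.5  # no evidence either way
        return sum(spam_counts[w] / total_counts[w] for w in words) / len(words)

    return predict


# Hypothetical labeled data: True means spam.
examples = [
    ("win free prize now", True),
    ("free money offer", True),
    ("meeting agenda for monday", False),
    ("lunch on monday", False),
]
predict = train_spam_estimator(examples)

print(sales_tax(100.0))           # an exact answer from a rule
print(predict("free prize"))      # a score between 0 and 1, learned from data
print(predict("monday meeting"))  # a lower score for a message that looks ordinary
```

The rule-based function never needs training data and is always exactly right, which is why a programming approach fits it. The estimator only ever returns a likelihood, and its quality depends on how much labeled data it has seen.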

Schmelzer: Right. So now, I know many of you might argue with some of these points and say, "Wait a second, aren't we trying to build AI systems that can use a small amount of training data, maybe no training data, the vision of what's called zero-shot learning -- or even the use of cloud-based systems that have a huge model that we can maybe retrain or extend using transfer learning, some of this cloud vision stuff?" The answer is yes, it is true: Some of those points are actually starting to go away, which means that we are broadening the reach of where AI and machine learning can be applied to situations with less training data, or situations where maybe we have a human doing a task and the cost and complexity of the AI system have now gone down considerably.

The only reason why we mention that is because sometimes those issues are a factor. And they become a factor when you look at something called the AI go/no-go decision, which is something that actually Intel and others have popularized and is part of a methodology for doing AI and machine learning projects well. In which case, you should ask yourself these questions -- and these are the questions that will help you identify whether AI and machine learning projects are even possible, given the problems you're trying to solve. One, do you have a problem definition that's even clear? Do you know what problem you're trying to solve? If not, that's kind of like the biggest no-go, right? Do you have people in your organization who are willing to change whatever they're doing now? If the answer is no, then there's no point in building a proof of concept and you can't even make the pilot a reality. And then, of course, the issue is will this even have any impact? Those are business visibility questions.

Then we have these data questions, which go back to some of the points that Kathleen was talking about earlier. Do we even have data that measures what we care about? Even if it's a small amount, does it even measure what we want? Do we have enough? Well, you know, while we may want to do zero-shot and maybe few-shot learning, that's not possible in every scenario. It might be possible in situations where we have a large pretrained model, like computer vision, but it might not be possible for predictive analytics and patterns and anomalies, where you're not going to want to detect a pattern when you only have five or six examples of that pattern. It all depends on the pattern you're trying to solve. Finally, of course, we have data quality issues. You know, garbage in is garbage out. That is definitely the case with machine learning. So, we have those problems. Those are all data issues.

And then on the execution side, the issue is can we even build the tech that we want? Do we have the technology infrastructure that we need? Do we have the machine learning development stack that we want? If we build this model, can we even do it within the time that's required? One issue is it can take a ton of time to train; do we have that time? How about the model execution time? Is it very slow? These are questions we need to ask.

And of course, this last point is, can we use the model where we even want to use the model? Is it possible to use the model if we're gonna have to use it, like, on an edge device, or in a cloud or an on-premises environment? Can we even do that? If the answer to any of these questions is no, that actually either makes your project very difficult or almost impossible. You can imagine these questions as being like a bank of traffic lights: If all the answers are yes, all the lights are green, and our project can go forward. You know, it doesn't solve all the problems, but it does let us know how we can move our project forward. And that's sort of key to this challenge.
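The go/no-go questions above can be sketched as a simple checklist in Python. This is a hedged illustration of the traffic-light idea, not the speakers' or Intel's tooling: the `Check` class, the `go_no_go` function and the sample answers are hypothetical, and the question wording is paraphrased from the discussion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    area: str      # "business", "data" or "execution"
    question: str
    answer: bool   # True is a green light, False is a red light


def go_no_go(checks):
    """Return (decision, blockers); the decision is "go" only if every light is green."""
    blockers = [c for c in checks if not c.answer]
    return ("go" if not blockers else "no-go"), blockers


# Sample answers are invented for illustration.
checks = [
    Check("business", "Is the problem definition clear?", True),
    Check("business", "Are people willing to change what they do now?", True),
    Check("business", "Will the project have an impact?", True),
    Check("data", "Does the data measure what we care about?", True),
    Check("data", "Do we have enough data?", False),
    Check("data", "Is the data quality sufficient?", True),
    Check("execution", "Do we have the technology and ML development stack?", True),
    Check("execution", "Can we train and run the model within the time required?", True),
    Check("execution", "Can we use the model where we want to use it?", True),
]

decision, blockers = go_no_go(checks)
print(decision)
for c in blockers:
    print(f"[{c.area}] {c.question}")
```

Returning the list of blockers alongside the decision keeps the checklist useful when the answer is no-go: it shows which light is red and therefore which roadblock to work on first.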

Walch: And the thing that's really important is making sure that the correct team is in place, and that the correct roles are in place and being utilized for the project as well. So we always, you know, say ask the question, "Is the right AI team in place?" And there are a few different areas that you can focus on. So, the business side, you know, do you have the line of business available? Business analysts, solution architects, data scientists -- a lot of times, data scientists fall within that line of business. So, you know, do you have the right roles and skill sets in place there? Then, data science itself -- you know, do you have a data scientist on your team? Do you have a domain specialist? And then we talked about data issues and data quality issues. So, if necessary, do you have external labeling or contributors so that you can get your data -- especially in supervised learning that needs good, clean, well-labeled data. Do you have labeling, you know, solutions in place? We also talk about the data engineering role. Within this role, you know, do you have data engineers, system engineers, a data team and also a cloud team in place that you're utilizing for this project and this team? And then operationalization. So, this is when you actually want to use the model in production. You may need app developers, system and cloud administrators. So, these are all different roles that are required to make your AI project team a success. And you need to talk about this and say, you know, do I need every single role? Do I have these positions? And, you know, do I have these in general so that I can actually apply them when needed?

Schmelzer: Yeah, and I think sort of the challenge with this is that, as an organization, you might be a small company. You might be just a handful of people in your organization, or you could be a very large organization. And you might think of this as "oh my goodness, I have to hire all these people." And the answer is, well, you don't necessarily need to hire these as individuals. They just need to exist as roles, and if you don't have these as roles in the organization, it does make it much more challenging to get past the pilot project phase, which is what this webinar is all about.

Now, yes, there are companies building tools that are democratizing, as it were, data science to put it in the hands of more people. There are tools that are helping with data engineering and making that a much more realistic task to do with a small number of people. And there are things happening on the operationalization side, too, with this evolving space of MLOps and ML management, ML governance. But I think the point is that you have to make sure that this is addressed somehow. It's either addressed with a person, it's addressed with a role or it's addressed with a tool. If it's not addressed with any of those things, and you have a bunch of folks who want to make stuff happen but very few people who can make it happen, then you will find that you will run into this roadblock.

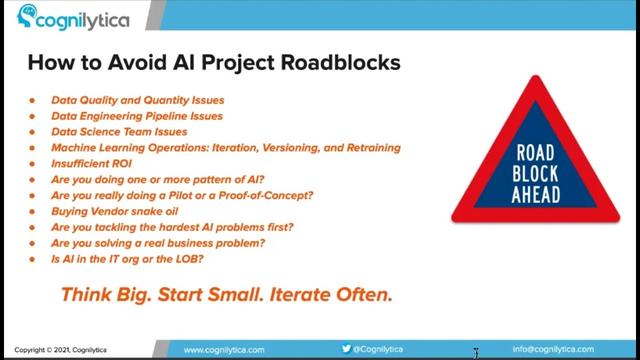

So, part of the way to avoid these roadblocks is, of course, like the big mantra -- which is "think big, start small and iterate often." But that basically relates to each one of these particular AI project challenges, right? And we can kind of go through them. And we've actually been talking about some of them throughout this webinar -- part of which is that if you have data quality issues and you have data quantity issues, that is one potential roadblock, and you need to figure out, "Do I need to solve all of it at once? Or can I solve a little bit of it?" Again, apply "think big, start small and iterate often" to each one of these bullets. And maybe you can start with a smaller problem that requires less data and a smaller number of steps that you can use to address data quality issues.

Walch: Right. There are also data engineering pipeline issues and data science team issues. You know, we talked about do you have the right roles in place? And if you don't, then, you know, think about how you can get that. You know, is there another solution that I can get? Can I hire somebody for this role? Can I train somebody in this role? And if the answer is no, then that may be an issue, that may be a roadblock that you have.

Schmelzer: Same thing with operations, we can think about the things we need to do with operations. How am I going to version my model? How am I going to iterate my model? Have I built a retraining pipeline? If the answer is no, then can I -- instead of addressing it again, biting off more than I can chew -- can I iterate? Start with one small model; version that one model; figure out how to retrain that one model, a small model; then move to two models or a larger model. You know, we can do it that way. This is how we address the roadblock. Same thing with ROI -- if they're saying, "Look, I'm not, you know, I'm not gonna invest X million dollars in this huge solution, which I have no idea what the ROI is. Can I start small? Is there a way I can start with a smaller project with a smaller ROI and iterate to a better solution?" Same thing with the patterns, you know. Maybe I'm trying to, you know, boil the ocean here and do three or four or five patterns of AI all at once -- a conversational recognition system that does predictive analytics and pattern and anomaly detection in an autonomous way. That might be a very, very difficult thing to do. So, can I split this project into smaller phases and maybe just tackle the conversational part, just tackle the recognition part or something else, and then build that up over time?

I think the next thing that is of concern is there is a difference between a pilot and a proof of concept, right? Sometimes they're used interchangeably, but they shouldn't be. A proof of concept is, can I just experiment with this technology? Can I even do what I want to do? You know, do I have the specific skills? You know, I'm experimenting with this thing, can I build like a so-called toy project just to see if it works? Whereas a pilot is supposed to be a real problem in a real environment with real data with real problems. And I think you can address those issues -- again, we could think big, start small and iterate often -- with a small pilot that really is going to be useful, not some sort of thing that's not even going to be useful. Right?

Walch: Right. Another thing that we've seen is, you know, don't buy vendor snake oil. So, there's a lot of marketing hype and spin and excitement around some of these companies, and some tools and offerings that companies say they can provide. Try to avoid those pitfalls, because that will be a roadblock. You know, if a company says that they can do, you know, five, 10, 15 different things and they really can't, make sure that you're understanding that. Also, are you tackling the hardest AI problems first? You know, Ron keeps saying -- and at Cognilytica, we keep saying -- think big. So, think about those hard problems, but then start small and iterate often. If you're tackling the hardest AI problems first, it should come as, you know, very little surprise that it's going to be an incredibly difficult project and most likely will fail. If you start small and you continue to iterate, you have a much better chance that the project will succeed and continue to move forward. Also, are you solving a real business problem? You know, this goes back to the ROI question as well. Are you solving an actual real business problem, or are you just building that little toy project that Ron talked about? And then, is it actually providing real ROI that's measurable and has an impact on the company?

Schmelzer: Yeah, and finally, you know, one other challenge you might face if you're hitting a pilot roadblock is where's the AI project even being run? Is it run within the IT organization, so treated like a technology thing? Or is it within the line of business, which is treated like a business thing? Of course, our perspective is that AI projects are transformative and they should be part of the business. It's not an IT thing, even though there's a technology component. But just like you don't ask your IT organization to put together Excel spreadsheets and charts for your business -- that's usually part of whatever line of business function, sales, marketing, finance, operations, whatever -- same thing with AI. AI is not a function of technology; it is a function of the business role, and therefore, the business should be in charge of that and should own that.

So, one of the things that we talk about a lot at Cognilytica is there is a methodology for doing AI and machine learning projects more successfully. It's called CPMAI -- cognitive project management for AI if you want to know what it stands for -- and it is based on a decades-old methodology called CRISP-DM, which originally was focused just on data warehouse and data management, data mining projects, which is the DM part of CRISP-DM. And it's basically an iterative methodology for starting with the business understanding but then going through these other phases of data understanding, data preparation, data modeling, model evaluation and then, finally, model operationalization. And what CPMAI does is it adds the AI-specific requirements about model development and model evaluation. And, see, CRISP-DM doesn't really talk about model operationalization. So, that's a completely new thing. And the other thing that CPMAI does is it brings in Agile methodology, which really was not very popular when CRISP-DM first came out. And that is the accepted methodology. The question is, can we do two-week sprints for AI projects where we can actually accomplish something really useful -- an actual machine learning project in a short sprint? The answer is of course you can, and that's what CPMAI methodology is all about. It's about achieving success by doing what Agile has proven -- which is, again, think big, start small and iterate often.

Walch: Right. So thank you, everybody, for joining us for this presentation. And if you have any questions, you can always reach out to us at Cognilytica. Our information is below.